By investing in peer review, the receiving organisation will achieve a well-rounded understanding of how their work is perceived.

Ambition and Quality Investment Principle and Peer Review

Peer review has long been encouraged by Arts Council England (ACE) across its funded organisations, as demonstrated by its own Artistic and Quality Assessment programme[1] and its inclusion of peer review in previous projects using the Culture Counts evaluation platform (QMNT, 2015-16; Toolkit, 2019-23). Now that peer review is no longer mandated by a funder, it might feel easier to let it fall by the wayside. However, the Ambition and Quality Investment Principle (IP) makes clear that ACE still values peer review highly, and it is regarded as essential in other sectors too.

In its published guidance on the Ambition and Quality IP, ACE states:

We want a sector that develops creative ambitions and improves the quality of work by listening to the views of people inside and outside its immediate circle.

[…]

You will seek to understand, reflect and respond to the views of audiences, participants, co-creators, customers, peers, staff, and other stakeholders. These external views can inform the way you work and provide you with sound evidence on which to base decisions about your activity.[2]

With evidence from a variety of stakeholders on the extent to which your ambitions are being met, you can learn how each group’s position affects its experience of your work and, in turn, whether the work should be developed further to have the desired effect.

So, how does the Impact & Insight Toolkit support this?

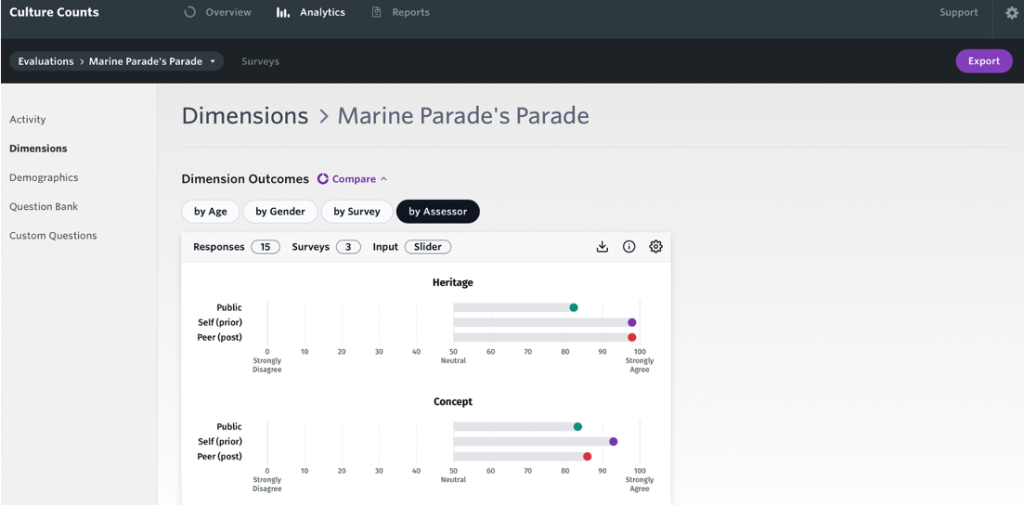

Firstly, Culture Counts, the evaluation platform used in the project, has ‘triangulation’ as a feature. Triangulation divides all respondents into three categories: self assessment, peer review, and public response. Where dimension questions[3] are used, the results across these three categories can be compared. This helps you to understand whether the work has been experienced similarly across the different groups, or whether there is disparity.

Each respondent category serves its own purpose, in addition to the triangulation that becomes possible when they are combined:

- Prior self assessment – A prior self assessment takes place before the work occurs. It is an opportunity for the creators, curators and facilitators to express their expectations for the work in a measurable way. This creates a ‘baseline’ against which other responses can be compared. For another layer of insight, a user can also opt to conduct a ‘post self assessment’, in which the same creators, curators and facilitators reflect, again in a measurable way, on the work as it took place.

- Post peer review – Anyone who can offer a professional perspective on your work can be a peer reviewer. Peer review provides another angle on the outcomes of a work. It enables the Toolkit user to see how their work is perceived by a professional, and to receive constructive suggestions for improvement and insights into how their work might ‘fit’ into the wider cultural sector. Some users also choose to conduct a ‘prior peer review’ so that they can measure whether the peer reviewers’ expectations were met.

- Public response – Audience members, participants or visitors can complete a survey to inform the organisation of their experience and perception of the work. When dimensions are used, these responses are measurable and can be compared with:

  - Self assessors’ and peer reviewers’ perspectives
  - Responses from the organisation’s previous surveys using the same questions
  - Responses to other organisations’ surveys where the same questions were used

A screenshot of the Analytics Dashboard, showing triangulation in an erroneous evaluation

Two insights that Toolkit users have gained through triangulation are:

- The expectations shown in the prior self assessment were reflected in the post peer review, but not in the public response. This could indicate that the evaluated work is not ‘landing’ with the public as expected. Why might this be? There may be an accessibility issue: perhaps a barrier of knowledge or experience is preventing the work from having the desired impact on the public. Once there is some evidence of this, it can be addressed if necessary.

- The dimension results showed the same ‘shape’ across all three respondent categories: even though the scores differed, the same dimensions scored most highly in each category. This might indicate that, although the strength with which respondents experience each outcome varies, the same outcomes are experienced more fully than others across all groups.

To be clear, alignment across all respondent categories is not innately a good or bad thing. Only you know what your hopes and expectations are. However, if you know what you expect to see, and that is reflected in the data presented, you can feel assured that your practice is having the desired effect.

Secondly, Culture Counts has an inbuilt Peer Matching Resource, available to those participating in the Toolkit project. What is this Resource? It is essentially a database containing information on over 700 arts and culture professionals across England who have agreed to be contacted by Toolkit participants with invitations to experience the participants’ work and provide a review. This gives Toolkit participants the opportunity to expand their professional networks and obtain peer reviews from beyond their immediate circle. However, if a Toolkit user would value a peer review from someone within their circle, they can arrange this without using the Peer Matching Resource: the ability to invite someone to complete a peer survey outside the Resource is also built into Culture Counts.

Thirdly, we have guidance available on our website covering Principles of Peer Review, Practicalities of Peer Review, and Becoming a Peer Reviewer. If you have any questions about what peer review is, how it works and why you would want to engage in this area of work, please do read the guidance.

Words from your Peers

We have been fortunate enough to speak with users of Culture Counts about their experience of peer review and why they have chosen to participate in this area of work. Please see below a few words from the case studies of Right Up Our Street, Coney, and Royal Liverpool Philharmonic.

Right Up Our Street

Peer reflection enables us to give people a voice that we work with, in a partnership sense and capturing their thoughts, because we couldn’t grow without knowing what they thought of the project. There is a really big thing about people feeling valued when they’re asked to comment on a project. It’s all part of showing the bigger picture.

Coney

Coney used the peer assessment process to strategically build an impression about our work from the broadest possible influence. […] It placed Coney’s work within a wider cultural debate; understanding from those exceptional people in other sectors what it is about our work that is interesting, what resonates and what is translating as cultural excellence across the broadest possible scale.

Royal Liverpool Philharmonic

Whilst it’s good to be ambitious, it was great for our artistic team to have some external peers come along and evaluate what we are doing and give their impressions.

As always, thank you to everyone who offers their time and words to help us build case studies, share experiences and support other users.

Closing Thoughts

We fully appreciate that embedding peer review in evaluation practice adds another ‘thing’ to the permanently cluttered ‘to do’ list. However, by investing in peer review, the receiving organisation will achieve a well-rounded understanding of how its work is perceived. Beyond the dimension questions, the well-considered questions in the peer surveys, available in the evaluation templates, provide an opportunity for important semi-structured feedback. Responses to both the dimension questions and the other templated questions are visible in the Analytics Dashboard[4], the Reporting Dashboard[5] and the downloadable raw data. This data should feed into conversations with senior management and board members, informing future developments of your programme and offer. In turn, this helps you to fulfil your organisation’s ambitions and deliver high-quality experiences.

Featured image credit – Anastasia Maksimova @ aisvri on Unsplash

[1] https://www.artscouncil.org.uk/supporting-arts-museums-and-libraries/artistic-and-quality-assessment

[2] https://www.artscouncil.org.uk/blog/essential-read-ambition-quality

[3] https://impactandinsight.co.uk/demystifying-dimensions/

[4] https://impactandinsight.co.uk/resource/analytics-dashboard/

[5] https://impactandinsight.co.uk/reporting-ondemand/