Many thanks to Right Up Our Street, part of the Creative People and Places programme, for sharing their experiences and insights with us. In particular, we would like to thank Lizzy Hewitt for giving up their time to participate in this evaluation case study.

This case study is presented as a series of questions and answers, each followed by a key suggestion from Counting What Counts (CWC) guided by the responses. We would strongly encourage you to read the full case study, as the detail provided by Right Up Our Street is invaluable. However, if necessary, you can skip to the end and read only CWC’s Suggestions.

Introduction

Right Up Our Street is part of Arts Council England’s Creative People and Places (CPP) programme. Based in Doncaster, Right Up Our Street is community-led and ‘works in partnership with Doncaster’s communities, listening to what they need and want, and co-creating an arts programme that is relevant and meaningful’. Working with a great variety of people, embracing a great variety of artforms, Right Up Our Street makes a great variety of inspiring art and activities.

Being a CPP means that there is a mandated aspect to Right Up Our Street’s participation in the Impact & Insight Toolkit project. CPPs need to conduct 4 evaluations per year, using a choice of two question sets, and to submit the corresponding Insights Reports to Arts Council[1].

We were very fortunate and thankful to have the opportunity to speak with Lizzy from Right Up Our Street, to listen to their experiences and to share their learnings with you.

Faces of Balby Bridge by Jamie Bubb. Photo Credit: Sally Lockey


As a CPP, you need to conduct 4 evaluations per year; how have you decided which of your works to evaluate with the Toolkit?

We have three strands of work in our business plan: Doncaster’s Festival of Light, Priority Communities, and 21 Wards. So, we purposefully pick a project to evaluate from each of those strands, in line with the business plan. Also, something we like to consider is the artforms we’re representing; the selection has got to be a representative sample of what we’re doing and who we’re reaching. Furthermore, it’s nice to have a contrast between artist-led events and works that have been co-created with the community. Therefore, it might look like a bit of a ‘pick and mix’ every year, but it is something we’ve really considered. It’s well thought out, and we want representation of our programme.

Regarding the Festival of Light, we’ve done two years of Impact & Insight Toolkit evaluation with the same questions, which is so helpful to us because we can reflect on that directly through the dimensions and consider how people are feeling.

CWC’s takeaway and/or suggestion

Use your organisation’s business plan, priorities and structure to help you choose what to evaluate.

How have you collected the data?

In terms of collecting data, we use a combination of the interview, display and online link methods. Sometimes we’ve used paper if needed, as well as iPads and QR codes on site. This combination has worked well for us.

Different types of work lend themselves to different methods of data collection, depending on a variety of factors including audience demographics, the location of the work and whether the work is ticketed. There is nothing to stop you from using multiple delivery methods in your surveys.

CWC’s takeaway and/or suggestion

Use more than one method of survey delivery to maximise responses.

Poetry Takeaway photo. Photo credit: Sally Lockey


What challenges have you experienced when using the Toolkit?

The mandated use of the Relevance dimension (‘It had something to say about modern society’) has been the biggest challenge for us and, in terms of overcoming that, I don’t think we have necessarily found a way. This might just be an ‘us’ thing, and not CPP-wide, and I totally get that. Looking forward, it would be interesting to discuss or see what other organisations are feeling when it comes to that specific question.

Regarding managing both the Toolkit and Illuminate: as with any new software or new way of doing something, there are always going to be ironing-out moments while we figure it out. So that’s the approach we have taken as a team; we are going to do our best with what we can collect. And I think there’s been a lot of grace granted while everyone figures it out. I’m still learning about Illuminate and it’s not something I’m super clear on, but it’s nice that we feel we can work together, within our organisation and across the wider network, to figure it out.

CWC’s takeaway and/or suggestion

Engage us in conversation or raise concerns with your Relationship Manager. There might be other organisations feeling similarly, but neither ACE nor CWC will know unless you say. There may also be guidance or a specific resource that ACE or CWC can direct you to for suitable support, if necessary.

As a CPP, it is mandatory to conduct prior-work self-assessment (expectation setting) and public surveys. However, you have also embraced post-work self-assessment and peer review. Why did you choose to do this?

Peer review provides an extra level of understanding of how projects have gone, and that’s mainly why we also do the post-work reflection as self-assessors. It’s just as important for us to reflect on the project as it is for audience members. We had our initial intentions, and we then need to reflect on how the project went, to grow and see that journey through together. We will also have very different perspectives, and it’s interesting to track those different versions of our experiences, even though they are of the same event.

Peer reflection enables us to give a voice to the people we work with, in a partnership sense, and to capture their thoughts, because we couldn’t grow without knowing what they thought of the project. There is a really big thing about people feeling valued when they’re asked to comment on a project. To me, it’s all part of showing the bigger picture. Using the extra surveys provided in the templates doesn’t take much effort either. It’s lovely to reflect on.

CWC’s takeaway and/or suggestion

When used in conjunction with prior-work self-assessment, post-work self-assessment provides space for guided and comparable reflection.

Peer reviewers don’t need to be from the ‘art world’ – you choose who’s best for your organisation and your work.

TREES by Things that go on things. Photo credit: James Mulkeen


As a CPP, there are two question sets for you to choose from. However, you can add your own questions too. How did you decide on which questions to add to your surveys?

We have a story of change which highlights targets that we want to reach. One of those targets is making people from Doncaster feel proud of where they live. So, the dimension question we have introduced for our larger scale events is…

Pride in Place – It made me feel proud of my local area.

It’s important that we measure this because it aligns perfectly with our target. We need to measure whether our projects are affecting how people think and feel, and whether they’re proud of where they’re from. In terms of changing the additional questions we ask respondents, we take an ‘action learning’[2] approach, so it’s really important that we make changes as and when necessary.

CWC’s takeaway and/or suggestion

Use your organisation’s mission statement, theory/story of change, or priorities to guide any decisions around the inclusion of questions.

In addition to the creation and submission of Insights Reports, how have you been using the data collected via Culture Counts?

The evaluations (and Insights Reports) allow us to see if we’re on track with the business plan and the story of change.

As a team, we have quarterly reflections, where we talk about the highs, the lows, the stresses, and the learning of every individual project. This is also helpful from a mindful point of view – ‘putting things to bed’ and being able to say “well, maybe that didn’t work, but that’s okay because we’ve learned from that.” I know the team is always really impressed with the visual element of the Insights Reports, and I honestly feel really proud when seeing our project in that format.

The instant visuals generated by Culture Counts are shared within the organisation via Insights Reports, and then with the wider public via case studies facilitated by external evaluators. The case studies are often useful for the artists we work with too, particularly to show the impact that their work has had. We’re very transparent, so even though there are people in the room analysing the data, it is not just for us. It’s also for the public, because it’s just as much about them as it is about us. Transparency is definitely something that we are keen on; it’s really refreshing to be open in this way and share our learning, hence our focus on ‘action learning’. We use that phrase as a comfort; we have to take risks because that’s how we grow.

CWC’s takeaway and/or suggestion

Learn and share your insight.

Don’t be afraid to question the ‘success’ of a project using the data and develop accordingly.

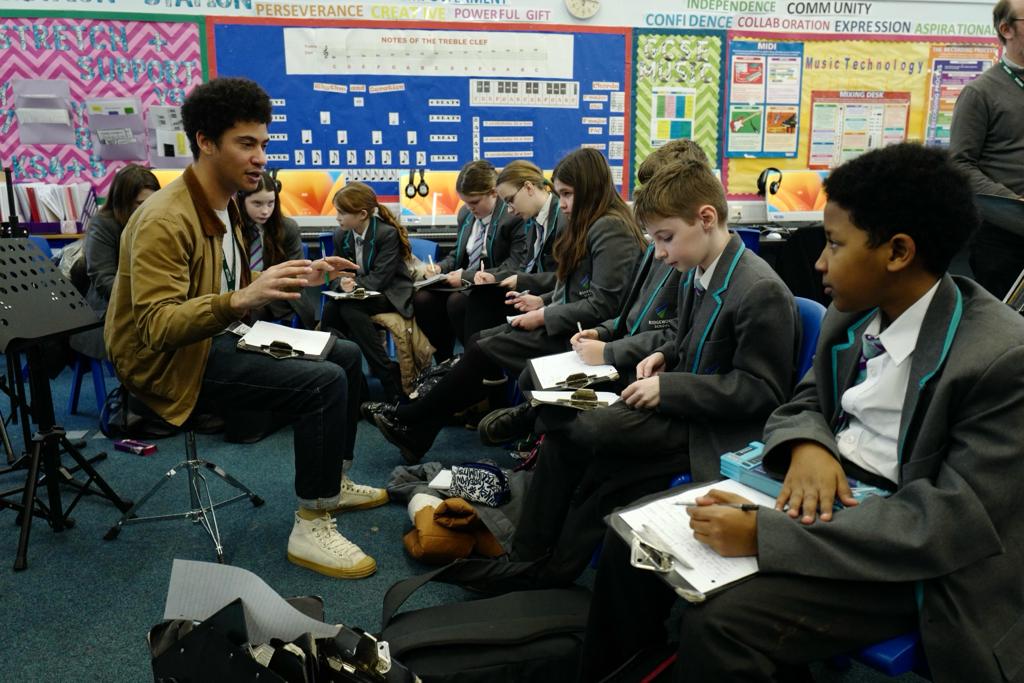

Noise Collective, Artist Skinny Pelembe. Photo credit: Sally Lockey


What have you learned from this?

The main thing I’ve learned from the Toolkit approach to evaluation is how important individual voices are. Every individual survey response really does count. We need to recognise that all of us (peer reviewers, self-assessors and members of the public) are answering these questions to make our place better and to make the programme work, which I think is a beautiful concept.

It’s never been about numbers for us in terms of how many responses we get, but it’s important that we’re getting that quality individual interaction through the surveys, and that’s through peer review and self-assessment as well.

We are here for Doncaster people, and they are getting their say. What we’re delivering is all about what the people want and what they want to do; we’re making sure that we’re creating events that are joyful, and that people want to attend. And so far, from looking at the results, I think we are doing that, which is really uplifting to see. By having all this data in one place, we can prove it. I also love it when people are imaginative with their answers to free text questions. It produces those one-liners where you feel like you’ve made a difference. We offer free events for families and when they’ve totally ‘got’ it, it feels like it’s worthwhile. We’ve made a difference to that family and seeing their comments is a beautiful moment.

CWC’s takeaway and/or suggestion

Dedicate time to ‘sitting’ with the data and reflecting on the projects you’ve evaluated; your experiences of evaluating; and the people you’ve engaged with through the process.

What are your evaluation plans for the 2024-25 financial/evaluation year?

I think the team and I are getting to a place where we feel comfortable with the process of our evaluation. I think we really want to implement a little bit more of an ‘instant evaluation’. So, for the Festival, we used some of the measurement questions with children and encouraged them to place a sticky dot on a scale, rather than doing it digitally. So, we’re still using the dimension questions, but we’re reaching a much younger audience and that was interesting to implement. I would love to do more of that because it works, and we can also track progress across a different demographic.

But, honestly, just more of the same! I think that, particularly while we’re still in this phase, we need to keep things consistent and keep our questions the same. I would like to keep doing more evaluations, using the Toolkit for projects that we don’t intend to ‘submit’ to Arts Council, to give a fuller representation of what our CPP offers. I can only see the Impact & Insight Toolkit becoming more useful.

CWC’s takeaway and/or suggestion

Keep going with things you’ve had success with.

Experiment with different approaches to further your evaluation reach.

Closing thoughts from Right Up Our Street

We really make sure that the data we’re collecting, even if it is compulsory, works for us just as much as it works for Arts Council. Sometimes this involves changing perspective a little, moving from evaluation being something that we have to do to something we want to do. That’s the mindset that the team and I have on it now. It’s such a good thing to be able to collect people’s thoughts and feelings, which will, in turn, make our programme stronger. So, make evaluation work for you, just as much as for funders or other stakeholders. Then it becomes something that is a joy to be involved with.

Into the Park, TEAM RUOS. Photo credit: David Sanchez


Counting What Counts’ Suggestions

These suggestions have been compiled through listening to Right Up Our Street and reflecting upon their responses. These suggestions may not be suitable for you or your organisation, but they are certainly worth considering!

| Question | Suggestion(s) |
| --- | --- |
| How can you decide what to evaluate? | Use your organisation’s business plan, priorities and structure to help you choose what to evaluate. |
| Which supported method should you use to collect data? | Use more than one method of survey delivery to maximise responses. |
| How should you manage challenges you’ve experienced when using the Toolkit? | Engage us in conversation or raise concerns with your Relationship Manager. There might be other organisations feeling similarly, but neither ACE nor CWC will know unless you say. There may also be guidance or a specific resource that ACE or CWC can direct you to for suitable support, if necessary. |
| Who is an appropriate peer reviewer? | Peer reviewers don’t need to be from the ‘art world’ – you choose who’s best for your organisation and your work. |
| How do you decide which questions to add to your surveys? | Use your organisation’s mission statement, theory/story of change, or priorities to guide any decisions around the inclusion of questions. |
| How can you use the data collected? | Learn and share your insight. Don’t be afraid to question the ‘success’ of a project using the data and develop accordingly. |
| How should you approach reflecting on your results? | Dedicate time to ‘sitting’ with the data and reflecting on the projects you’ve evaluated; your experiences of evaluating; and the people you’ve engaged with through the process. |

Thanks to Lizzy and the team at Right Up Our Street for their valued contribution!

[1] For more information on the mandatory aspects of Toolkit participation for CPPs, please see the corresponding guidance.

[2] https://www.indeed.com/career-advice/career-development/action-learning

Featured image credit – Doncaster Festival of Light – Universal Everything (Photo by David Sanchez)