Introduction

Making the most of the Impact & Insight Toolkit (Toolkit) throughout an organisation requires, more than anything else, conversation. The results you gather through the Toolkit can offer insight across the organisation, as long as you know where to start! That’s why a question has been allocated to each department, with the intention of prompting a conversation.

| Department | Question |
| --- | --- |
| Board | Are we meeting our creative mission or charitable objectives? |
| CEO | What is our creative value? |
| Artistic director | Are we meeting our creative intentions across the programme? |
| Producer/programmer | How are peers and audiences responding to our work? |
| Marketing | How realistic are the expectations we are selling to our audience? |
| Fundraising | How can I measure and evaluate the impact of our funded work for my stakeholders? |
| Development | Where is there room for improvement and could there be scope for a funded project or collaboration? |
| Audience development | How are we performing compared to our peers? How are our responses impacted for work delivered online? |
| Outreach | Can we demonstrate our work has relevance to those we reach? |
| Learning & participation | How does our creative impact change between different sizes and demographics of student groups? |

When used to its full extent, the Toolkit can provide evidence to support discussions focussed on all of these questions.

So, what can you look at to address these questions?

There are certain features within the Toolkit that can help us to delve into these questions:

- Triangulation

- Dimension selection

- Experiences vs. expectations

- Custom questions

- Benchmarking Dashboard

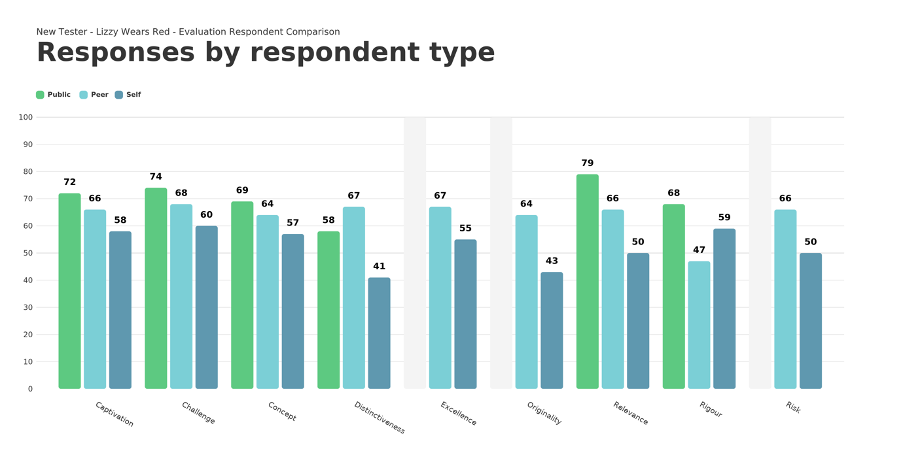

Triangulation

Triangulation means comparing how three different ‘groups’ perceive the same piece of work. In the Toolkit, we use:

- Self-respondents – people that have been directly involved in the curation or creation of the work

- Peer-respondents – people that are able to offer a valuable professional perspective on the piece of work, but are not emotionally invested in it

- Public – people that experience the work as audience members, participants or attendees

Having multiple respondents in each category will enable you to see if they are all experiencing the work in a similar way, or if there are noticeable differences. In the Toolkit, it is commonplace for self-respondents to complete a survey prior to the work’s ‘release’. It can be helpful to know if the various self-respondents have similar expectations for a piece of work, to ensure that the overarching purpose of the work’s programming/production is understood amongst the team.
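If you export your responses, the comparison described above can be sketched in a few lines of Python. This is a minimal illustration only, not a Toolkit feature: the `responses` data, the 0 to 1 score scale, and the function names `triangulate` and `largest_gap` are all assumptions made for the example.

```python
from statistics import mean

# Hypothetical responses: (respondent group, dimension, score).
# Scores are assumed to sit on a 0 to 1 scale.
responses = [
    ("self", "Captivation", 0.90),
    ("self", "Captivation", 0.80),
    ("peer", "Captivation", 0.70),
    ("peer", "Captivation", 0.60),
    ("public", "Captivation", 0.75),
    ("public", "Captivation", 0.85),
    ("public", "Captivation", 0.80),
]


def triangulate(rows):
    """Return {dimension: {group: average score}} for the supplied responses."""
    grouped = {}
    for group, dimension, score in rows:
        grouped.setdefault(dimension, {}).setdefault(group, []).append(score)
    return {
        dimension: {group: mean(scores) for group, scores in by_group.items()}
        for dimension, by_group in grouped.items()
    }


def largest_gap(group_means):
    """Return the difference between the highest and lowest group averages."""
    return max(group_means.values()) - min(group_means.values())


summary = triangulate(responses)
for dimension, group_means in summary.items():
    print(dimension, group_means, "gap:", round(largest_gap(group_means), 2))
```

A large gap between groups is not a verdict in itself; it is a prompt to ask why the groups experienced the work differently.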

Dimension selection

Dimensions are standardised statements, co-produced with creatives, funders and academics, each constructed to capture a certain outcome. One example is ‘Captivation: It was absorbing and held my attention’.

There is a set of core dimensions, pre-selected by Arts Council England (ACE), that users of the Toolkit are encouraged to use. Using this set of core dimensions is valuable as it will help researchers, funders and creatives understand how important it is to fund a variety of artistic and cultural works. It can’t be expected that visiting a visual arts exhibition in Manchester city centre will have the same impact as watching a musical concert in a rural village in Kent and, by using a common set of dimensions, this can be explored.

However, users of the Toolkit are also able to select further dimensions to add into their surveys. They are encouraged to explore the dimensions available and select a few that speak to the organisation’s mission as well as the individual work’s purpose. One interesting exercise is to show the available dimensions to a selection of team members and ask each of them to individually choose those they feel best reflect the organisation’s mission and the purpose of the individual work. They can then come together to compare choices, refine their shared understanding of the organisation’s mission, and use the agreed dimensions in their surveys to check that this mission is being received by those who experience the work. For instance, if an organisation’s mission was focussed on fostering a sense of pride within a community, they might choose the dimension ‘Place: It made me feel proud of my local area’. If team members didn’t choose this dimension, it would be interesting to understand why. If members of the public disagreed with this statement, the team might reassess how their intentions can be communicated more successfully to the public through their work.

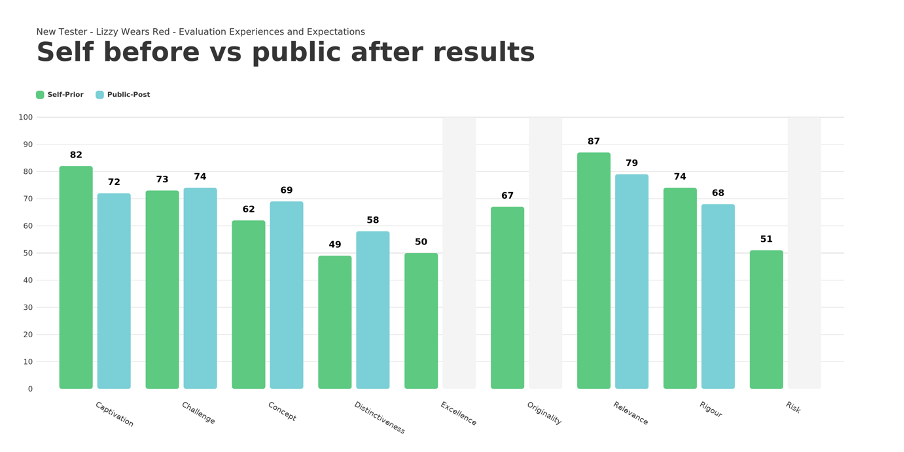

Experiences vs. expectations

In the Toolkit, it is commonplace for self-respondents to complete a survey prior to the work’s ‘release’. In some instances, it is also appropriate for peer-respondents to complete a prior survey. Conducting a prior survey is useful for two reasons:

- To establish understandings about the intentions for the work

- To establish a baseline for post-experience responses to be compared with

The different dimension scores applied to a work before it occurs will present an overall ‘shape’ of expectation. Once the work has occurred, the public’s reaction to the work can also be graphed and have a ‘shape’. The two shapes can then be compared, enabling the organisation to learn the extent to which their expectations for a work were realised. Of course, a prior survey can also be compared with peer responses! This would enable the organisation to learn how their work is received by different categories of respondent.
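For those who like to work with exported figures, comparing the two ‘shapes’ amounts to subtracting the expected score from the experienced score for each dimension. The sketch below assumes average scores on a 0 to 1 scale; the numbers, the dictionary names and the `compare_shapes` function are hypothetical and not part of the Toolkit.

```python
# Hypothetical average scores per dimension, assumed to be on a 0 to 1 scale.
prior_self = {"Captivation": 0.85, "Challenge": 0.70, "Place": 0.60}
post_public = {"Captivation": 0.80, "Challenge": 0.55, "Place": 0.72}


def compare_shapes(expected, experienced):
    """Return experienced minus expected for each dimension present in both."""
    return {
        dimension: round(experienced[dimension] - expected[dimension], 2)
        for dimension in expected
        if dimension in experienced
    }


gaps = compare_shapes(prior_self, post_public)
for dimension, gap in gaps.items():
    if gap > 0:
        verdict = "exceeded"
    elif gap < 0:
        verdict = "fell short of"
    else:
        verdict = "matched"
    print(f"{dimension}: public response {verdict} expectations ({gap:+.2f})")
```

Swapping `post_public` for a set of peer averages gives the peer comparison mentioned above.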

Custom questions

Whilst dimension statements are clearly a focus of the Toolkit, there are opportunities for you to add in your own questions too! We call these ‘custom questions’ and these can be useful to:

- Collect information required for internal processes or funders’ requirements

- Provide supporting context to dimension results, aiding interpretation

Let’s look a little more at the second point. If you include a question asking whether this was someone’s first time experiencing your work, you will be able to see if the impact of your work varies between first-time and returning respondents. For instance, it could be that your result for ‘Challenge: It was thought-provoking’ is higher for first-timers than for seasoned attendees, which suggests that those who have attended before are not finding the work as challenging. If providing challenging work is an important part of the organisation’s mission, the organisation could consider programming more challenging work for its regular attendees, taking them to the next level. There are, of course, many further questions that could be asked to support interpretation of dimension results, but this is a starting point!
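The first-timer comparison can be sketched as a simple split of dimension scores by the answer to the custom question. Everything here is illustrative: the field names `first_time` and `challenge`, the 0 to 1 score scale, the sample values and the `mean_by_segment` function are assumptions, not the Toolkit’s export format.

```python
from statistics import mean

# Hypothetical public responses: the answer to a custom question
# ("Is this your first time?") alongside a score for 'Challenge'.
public_responses = [
    {"first_time": True, "challenge": 0.80},
    {"first_time": True, "challenge": 0.90},
    {"first_time": True, "challenge": 0.70},
    {"first_time": False, "challenge": 0.60},
    {"first_time": False, "challenge": 0.50},
    {"first_time": False, "challenge": 0.70},
]


def mean_by_segment(rows, segment_key, score_key):
    """Average the score_key values separately for each answer to segment_key."""
    segments = {}
    for row in rows:
        segments.setdefault(row[segment_key], []).append(row[score_key])
    return {segment: mean(scores) for segment, scores in segments.items()}


averages = mean_by_segment(public_responses, "first_time", "challenge")
print("First-timers:", round(averages[True], 2))
print("Returning attendees:", round(averages[False], 2))
```

With only a handful of responses in a segment, treat any difference as a conversation starter rather than a finding.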

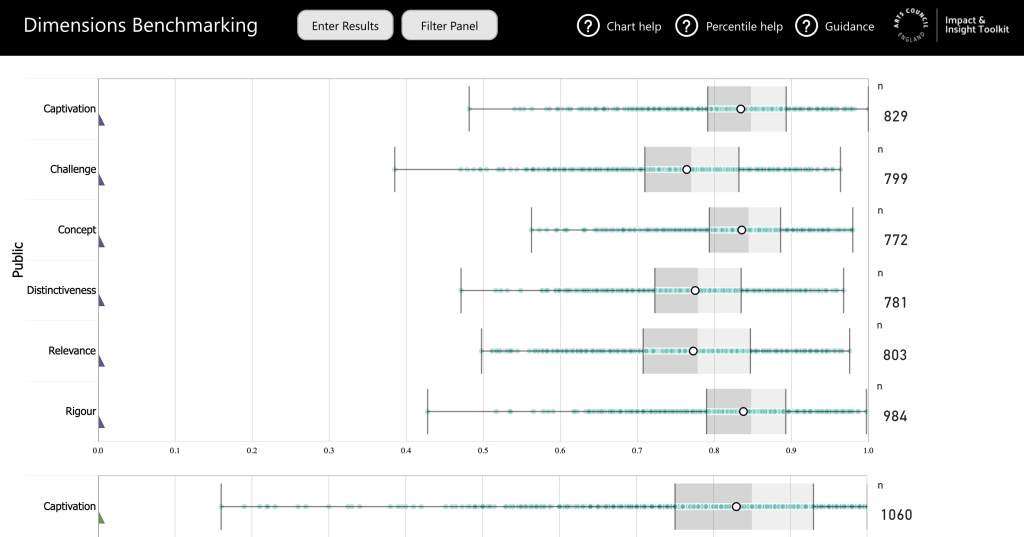

Benchmarking Dashboard

This Dashboard was developed to help users of the Toolkit better understand what their dimension results actually mean in the context of other Toolkit users’ results. The Benchmarking Dashboard allows users to compare their survey results to other Toolkit evaluations, providing artform-specific context for their individual results. For example, they might discover that what they thought was a ‘low score’ for the dimension Originality is in line with the results achieved by other users in their artform. To see more on this, it is recommended that you take a look at the Benchmarking Dashboard guidance. The overarching idea is to enable an organisation offering artistic and cultural experiences to see where their dimension results ‘sit’ amongst similar organisations.
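To make the idea of where a result ‘sits’ concrete, here is a small illustration. It is not how the Benchmarking Dashboard calculates anything; it simply counts the share of comparison scores at or below your own. The peer scores, the 0 to 1 scale and the function name `share_at_or_below` are invented for the example.

```python
def share_at_or_below(own_score, peer_scores):
    """Return the fraction of peer scores that are at or below own_score."""
    if not peer_scores:
        raise ValueError("peer_scores must contain at least one score")
    return sum(score <= own_score for score in peer_scores) / len(peer_scores)


# Hypothetical 'Originality' averages from other evaluations in the same artform.
peer_originality = [0.55, 0.60, 0.62, 0.65, 0.70, 0.58, 0.63, 0.75]
own_originality = 0.62

position = share_at_or_below(own_originality, peer_originality)
print(f"{position:.0%} of comparison scores are at or below ours")
```

In this made-up case, a score that might feel low in isolation lands in the middle of the comparison group.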

Something that is crucial to remember when interpreting your Toolkit results and using the features to help answer the questions raised is that context matters! Your numerical results are there to kickstart a conversation within your organisation, aiding development and decision-making; they are not there to overpower any qualitative data or context you may have. Anecdotal, observational, qualitative and numerical data are all valuable!

Image credit: Photo by Toa Heftiba on Unsplash