1. Introduction

The Ambition & Quality (A&Q) investment principle has three pillars and emphasises a range of activities that funded organisations might undertake. These include clearly formulating your ambitions, using tools to measure how people’s experiences of your work link to your intentions, and using different perceptions to inform your planning and delivery.

The Impact & Insight Toolkit (Toolkit) provides Arts Council England (ACE) funded organisations with the evaluation tools an organisation would need to undertake these activities and carry out quality evaluation as described in the A&Q investment principle. This is provided alongside customer support services and guidance from a friendly and experienced team.

The tools include a guided process for selecting quality metrics, the ability to survey different respondent groups, customised surveys for those different groups, evaluation templates for different types of work, and reporting tools which bring this data together in a way that allows you to report on and learn from all your evaluation activity. Together, these enable organisations to use data-driven methods to evaluate their work and measure progress against their ambitions by gathering feedback from the people who experience their work.

Consistent and considered evaluation with the Toolkit will ensure organisations have data and reports at their fingertips, to help them demonstrate how the work they produce contributes to their stated ambitions, as well as evidencing they have an improvement process which is informed by feedback and data.

This Evaluation Guide is written for ACE-funded organisations aiming to carry out quality evaluation as described in the A&Q Investment Principle, with the intention of meeting funding requirements and improving decision making and advocacy through the use of data and insight.

2. How should I use the Toolkit?

The Toolkit provides a collection of tools which together support comprehensive quality evaluation. This guide contains advice and suggestions on how to approach evaluation using the tools available in the Toolkit.

However, you don’t have to use every tool available to the fullest for the Toolkit to function; you are able to pick and choose which areas you want to focus your time and resources on.

So, what should inform your decision about where to focus?

If you are an ACE-funded organisation, you will most likely have made some commitment to the Investment Principles as part of your application process, including Ambition & Quality.

If you are a National Portfolio Organisation (NPO) specifically, you will have completed an Investment Principles Plan (IP Plan), which outlines specific actions and priorities that will need to be delivered.

In reviewing your commitments, or your IP Plan, your organisation will need to decide which aspects of quality evaluation you should be focussing on and which elements of the Toolkit you should be using to deliver against those commitments. Some examples of areas where you might place greater or lesser focus are:

- Describing what quality means for you and what you use to measure it

- Investing in customising your surveys to capture feedback specific to your organisation

- Surveying different groups of people both inside and outside your organisation

- Involving your board in the evaluation process – setting evaluation goals and using evaluation outputs

If you are struggling to make the connection between your agreed actions and the Toolkit, and you would like help in figuring out what you need to do, please get in touch. If you do, it would help us tremendously if you could share your agreed A&Q actions with us so we are better able to support you.

3. Dimension selection & Articulating Ambitions

The Toolkit’s Articulating Ambitions process is there to support your organisation in selecting your own dimensions to use as quality and outcome metrics. The overarching idea is that by picking dimensions that reflect your overall ambitions and delivery plans, the Toolkit can provide you with insight and analysis that will be truly valuable to you and your colleagues.

Given that we are at the start of the funding period, there is a lot for organisations to figure out, so you might not feel ready to complete the full Articulating Ambitions process yet. To keep the Toolkit as flexible as possible for you, we suggest a few alternative approaches which will still give you access to valuable insight.

For example, you may choose to use the Core dimensions (see below), which were used consistently by hundreds of arts and cultural organisations during the 2019-23 Toolkit project. Or you might wish to start by focussing on a small set of dimensions you select yourself as you learn what works best for you in terms of your immediate insight needs. But, if you want to dig a bit deeper, the Toolkit has been redesigned to make it easier for you to pick a larger set of dimensions, reflecting your organisation’s articulated ambitions, that you then pick from as you evaluate different types of events and activities.

We go into more detail on the different options below. For all the approaches which involve choosing your own dimensions, you will work with the Dimensions Framework.

Core dimensions:

| Dimension Name | Dimension Statement |
| --- | --- |
| Captivation | It was absorbing and held my attention |
| Thought-Provoking (previously Challenge) | It was thought-provoking |
| Concept | It was an interesting idea |
| Distinctiveness | It was different to things I’ve experienced before |
| Relevance | It had something to say about modern society |
| Rigour | It was well thought through and put together |
| Excellence (self & peer only) | It was one of the best examples of its type that I have experienced |
| Originality (self & peer only) | It was ground-breaking |
| Risk (self & peer only) | The artists/creators really challenged themselves with this work |

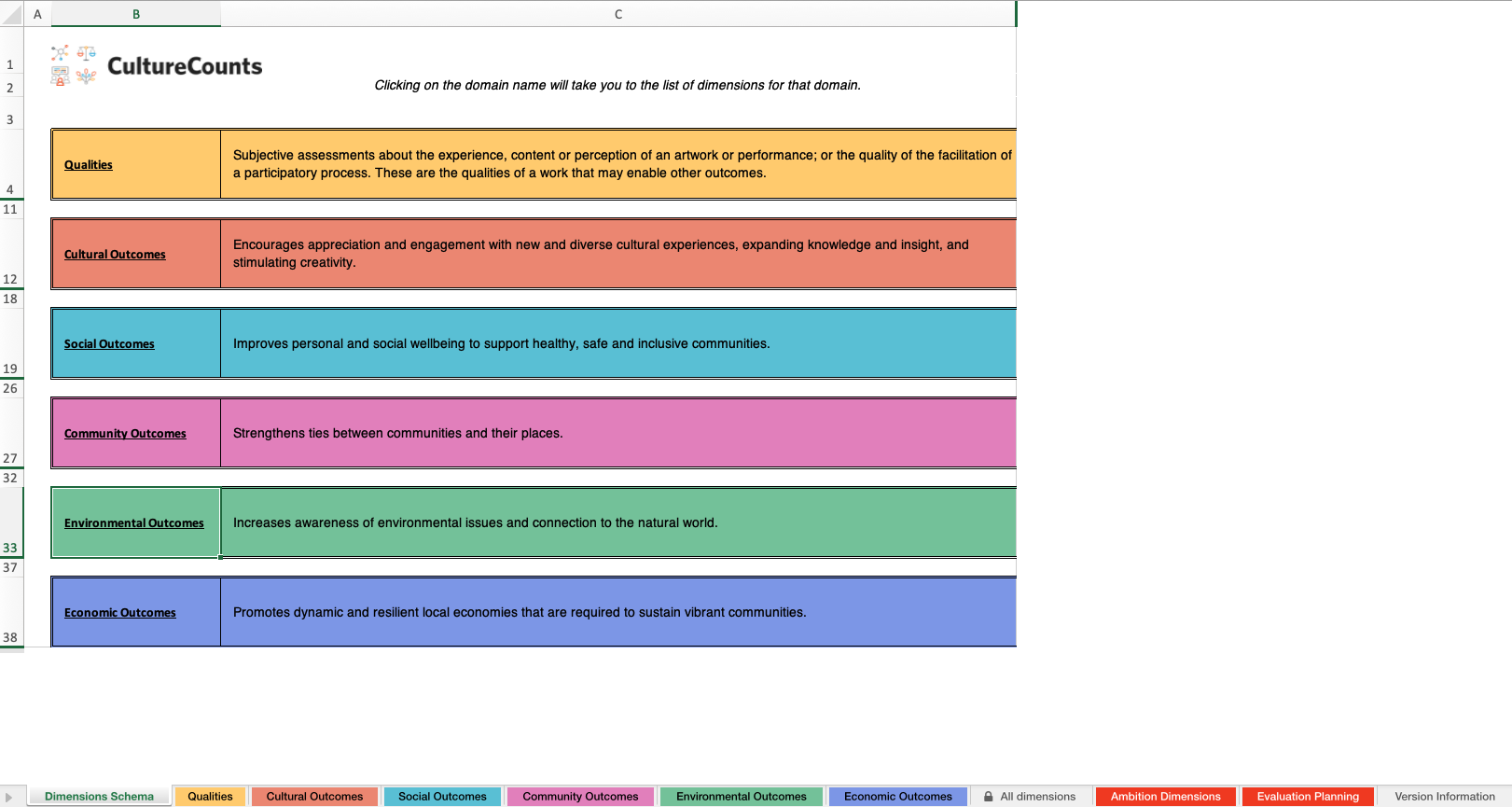

3.1. The Dimensions Framework

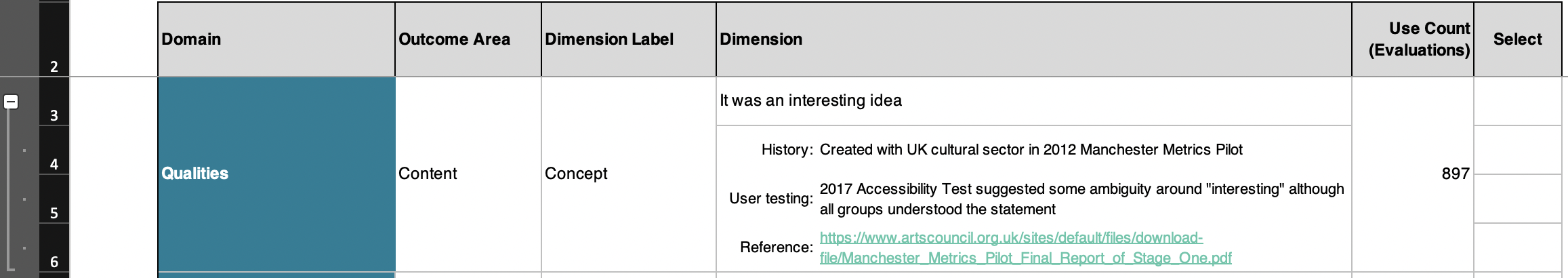

To select your own set of dimensions you will work with the Toolkit Dimensions Framework to review and select from the available dimensions. The framework helps to do this by organising the dimensions into groups, making it easier for you to find ones that are relevant to your work.

When browsing the dimensions in the framework, you can click on the ‘+’ on the left-hand side of each table to expand the dimension and see its history, user testing and reference information, where available.

To select a dimension, put a ‘Yes’ in the box next to the dimension you would like to use.

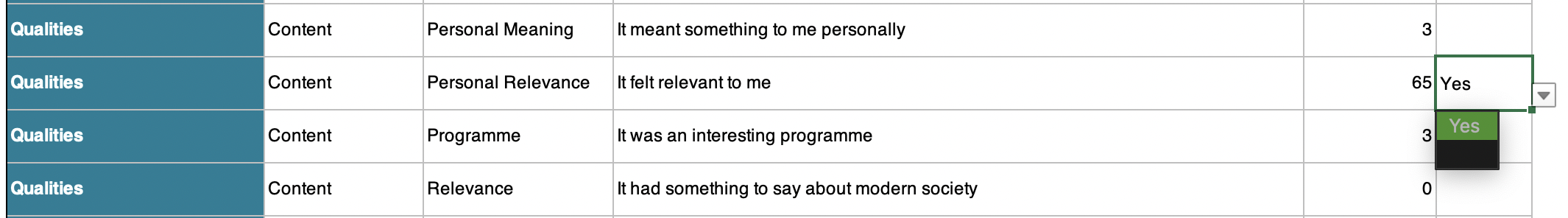

Every dimension you select will be listed on the ‘Ambition Dimensions’ sheet.

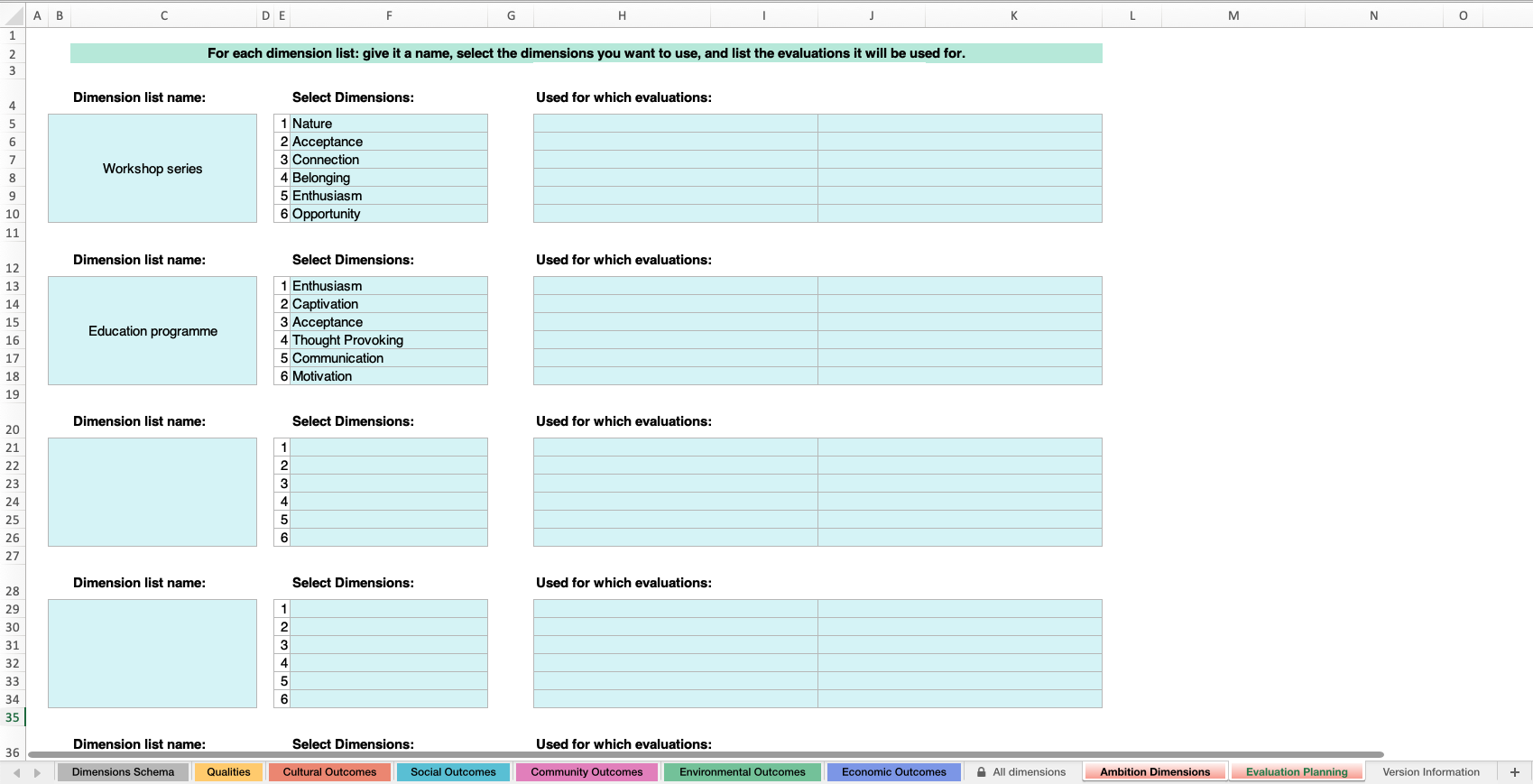

The framework also has an ‘Evaluation Planning’ sheet, which you can use to group dimensions together based on the types of work you intend to use them for evaluating, and for listing specific projects you intend to use that set of dimensions for.

Read our guide on the development of the Dimensions Framework for more information.

From the linked webpage, navigate to the menu on the left-hand side and select ‘Download the Dimensions Framework’. From here, you can download an Excel copy of the Dimensions Framework so that you can progress with articulating your ambitions.

3.2. Dimension selection approaches

3.2.1. Using templates

The simplest way to include dimensions in your evaluations is to use the evaluation templates which come with dimensions included.

Depending on the template selected, the dimensions included are either the Core dimensions or those that are most frequently used. Either way, you are provided with a large body of data to benchmark against.

However, without choosing your own dimensions, this doesn’t provide any meaningful links between your organisation’s ambitions and the quality and outcome metrics being measured.

We talk about evaluation template choices further in the ‘Deciding which evaluation templates you will use’ section of this guide.

3.2.2. Choosing a single set of dimensions

What you need: An Impact & Insight Toolkit Dimensions Framework

This approach involves selecting a set of 4-6 dimensions which you will use in all your evaluations, regardless of the type of work that you are evaluating.

This set of dimensions might be chosen to reflect your organisation’s mission, or an agreed idea of what quality work looks like for you.

4-6 dimensions are recommended because it is a good number for people completing the survey to respond to. You can choose fewer or more if you would like; however, it could impact upon survey completion rates or the quality of the data.

3.2.3. Choosing multiple sets of dimensions

What you need: An Impact & Insight Toolkit Dimensions Framework

This approach involves selecting a set of 4-6 dimensions for each type of work you want to evaluate, based on the qualities and outcomes you intend for that type of work. By ‘type of work’ we mean each area of your deliverables, for instance performance work, participatory work, exhibition work or talent development work.

The sets of dimensions can overlap, so they can include some of the same dimensions if there are some shared intentions across all the types of work.

Again, you can choose more or fewer than 4-6 if you would like, with the same potential impacts as outlined above.

3.2.4. Articulating Ambitions

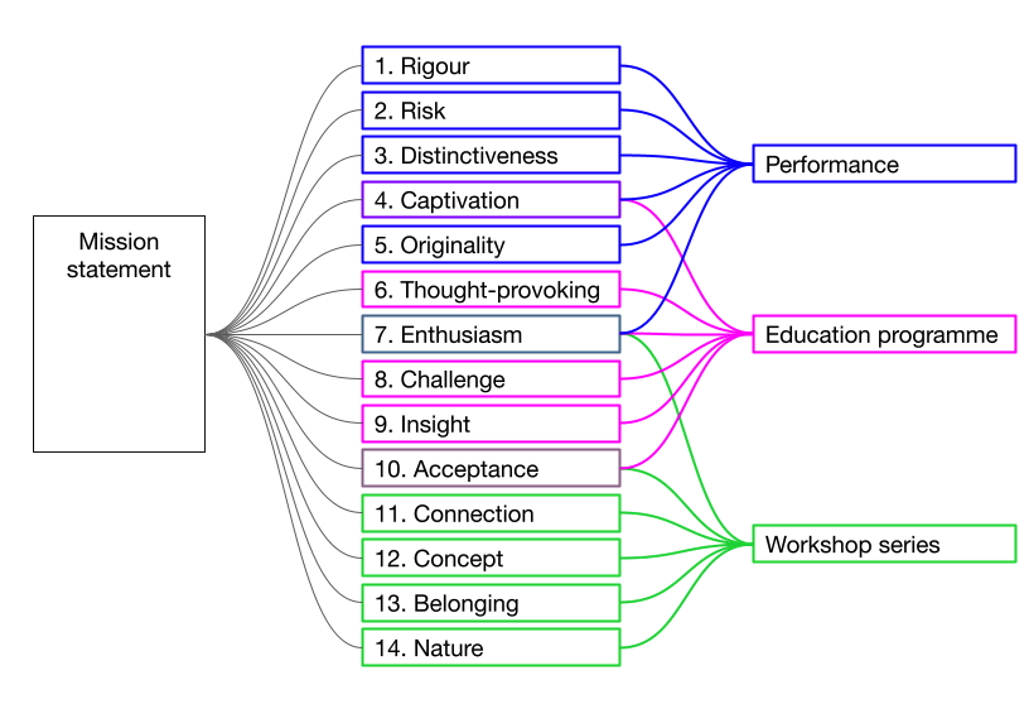

What you need: Your mission statement and an Impact & Insight Toolkit Dimensions Framework

The Articulating Ambitions process starts from your organisation’s mission statement, rather than from the different types of work that you produce, and involves choosing dimensions that reflect that mission statement.

The advantage of this approach is that, throughout all your evaluation activity, your organisation will be using quality and outcome measures that you have selected to help you to understand how far you are delivering what you are committed to. In turn, this helps you to use Toolkit evaluations to identify what you need to improve on.

To complete this process there are two steps:

- Select a larger set of dimensions which are a good reflection of your organisation’s overall mission. We would recommend selecting 10-15 dimensions in total to cover your organisation’s ambitions.

- From this larger set that represents your organisation’s mission, select the 4-6 dimensions for the different types of work your organisation delivers.

The result of this process is a mapping from your mission statement to the types of work your organisation delivers, with a set of metrics to be used as indicators of quality for each. This is visualised in the example below:

You will use a ‘Dimension List’ tool in the Culture Counts platform to save your chosen sets of dimensions, making it quick and easy for the person managing the evaluation process to create evaluations using the correct dimensions.

Here are some important things to bear in mind when choosing dimensions for your different types of work:

- You can use the same dimension across different types of work.

- Each type of work can be as similar or as different as you like.

- You don’t have to use every dimension from the articulated ambitions if you don’t feel it is appropriate. However, it is worth feeding this back to the colleagues who defined the organisation’s ambitions.
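If you keep your dimension choices in a spreadsheet or script, a quick check can confirm that a plan follows the suggestions above: a mission set of 10-15 dimensions, 4-6 dimensions per type of work, each drawn from the mission set, with unused mission dimensions flagged so you can feed them back. The sketch below is a hypothetical illustration in Python, not a Toolkit or Culture Counts feature; the dimension names come from this guide, while the work types, `check_plan` and its ranges are assumptions for the example.

```python
# Hypothetical planning check (not part of the Toolkit): does a mapping from
# mission dimensions to per-work-type dimension sets follow this guide's suggestions?

# Assumed mission-level set (11 dimensions named elsewhere in this guide).
MISSION_DIMENSIONS = {
    "Captivation", "Thought-Provoking", "Concept", "Distinctiveness",
    "Relevance", "Rigour", "Enjoyment", "Experimenting",
    "Motivation", "Confidence", "Artistic Skills",
}

# Assumed work types, each with its own subset of the mission set.
WORK_TYPE_DIMENSIONS = {
    "Performance": ["Captivation", "Thought-Provoking", "Distinctiveness", "Rigour"],
    "Participatory": ["Enjoyment", "Experimenting", "Motivation", "Confidence", "Artistic Skills"],
    "Exhibition": ["Captivation", "Concept", "Relevance", "Rigour"],
}


def check_plan(mission, work_types, mission_range=(10, 15), set_range=(4, 6)):
    """Return a list of warnings; an empty list means the plan matches the suggested ranges."""
    warnings = []

    # Suggested size of the mission-level set.
    lo, hi = mission_range
    if not lo <= len(mission) <= hi:
        warnings.append(f"Mission set has {len(mission)} dimensions (suggested {lo}-{hi})")

    # Suggested size of each work-type set, and membership of the mission set.
    lo, hi = set_range
    for work_type, dims in work_types.items():
        if not lo <= len(dims) <= hi:
            warnings.append(f"{work_type}: {len(dims)} dimensions (suggested {lo}-{hi})")
        outside = sorted(set(dims) - mission)
        if outside:
            warnings.append(f"{work_type}: not in mission set: {', '.join(outside)}")

    # Mission dimensions that no work type uses: worth feeding back to colleagues.
    used = set().union(*work_types.values())
    unused = sorted(mission - used)
    if unused:
        warnings.append(f"Unused mission dimensions: {', '.join(unused)}")
    return warnings


print(check_plan(MISSION_DIMENSIONS, WORK_TYPE_DIMENSIONS))  # prints []
```

The checks only encode the recommended numbers. As the guide notes, choosing more or fewer dimensions is allowed, so a warning here is a prompt for discussion rather than an error.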

3.3. Engaging the Board

Due to the emphasis on board accountability in the latest NPO/IPSO funding round, if you are an NPO/IPSO it is important for the board to have either ownership or oversight of the evaluation process. For example, this might involve the board selecting the appropriate works to evaluate; the dimensions that best reflect your organisation’s mission; or the respondent categories to gather feedback from.

Below, we give some different options for ways that your organisation could bring the board into the process of selecting dimensions.

Approaches to involving the board:

- Include which dimensions are being used in board papers so they are visible to the board

- Ask the board for review and sign-off of selected dimensions

- Involve the board in the selection of dimensions

3.4. Example Scenarios

Below, we give some example scenarios which draw on the options set out above. All these scenarios are perfectly valid in terms of use of the Toolkit.

3.4.1. Scenario 1

A community arts organisation has received National Lottery Project Grant funding. As part of their application to ACE, they said that they would put resources into collecting feedback from people who experience their work as per the Ambition and Quality Investment Principle. The founder of the organisation is the Culture Counts Lead User and is solely responsible for the quality evaluation process. As they have limited resources available, they think that it will be difficult to get their colleagues together to do a full Articulating Ambitions process. They decide to use the evaluation templates provided as they are, and to focus on evaluating as much of their work as possible using the dimensions that are automatically included. In time, and once they are more familiar with the Toolkit, they intend to involve their production/artistic team in selecting dimensions.

3.4.2. Scenario 2

In their Investment Principles (IP) Plan, an NPO has stated that they will better describe their ambitions for their performance work. The board recognises that this should involve selecting their own quality metrics, and they also recognise that their staff who work with the Toolkit day-to-day have a much better understanding of the Toolkit and the dimensions. Therefore, they ask the artistic team to work together to select a set of dimensions which they think are appropriate for their performance work, and to report back to the board with their choices so they can review and sign off.

3.4.3. Scenario 3

In their IP Plan, an NPO has said they will test how all their creative and cultural work, both its public and non-public aspects, clearly links to their aims and ambitions. They recognise that the aims of the organisation are owned by the board, and that they should at least be involved in making the link between the aims of the organisation, the work it produces, and what dimensions could be used to measure whether the aims are being met. Having spent 6 months getting their basic quality evaluation processes set up, the board then starts to work with the executive team to complete a full Articulating Ambitions process: choosing dimensions which link to their overall mission, and mapping these dimensions to the different works that they produce.

4. Evaluation planning & preparation

This step is about planning what you want to evaluate in the coming months and making sure that the person responsible for running the evaluations has the information they need.

It ideally involves cooperation between the Culture Counts Lead User and the senior management team of your organisation. The Lead User and senior management team might meet occasionally to review upcoming works, update existing evaluation plans and to inform decisions about what to plan next.

4.1. Deciding which works to evaluate

What you need: Access to the Culture Counts platform and your organisation’s articulated ambitions.

In an ideal world, you could evaluate everything your organisation produces. However, setting up evaluations, configuring and customising surveys, sending out survey links, running face-to-face interviews and checking the data all require time and resources.

Because of this, most organisations won’t be able to do a full evaluation of every work they produce. But that’s okay. This step involves selecting the works you will evaluate so you can get the most out of the resources available to you.

When planning which works to evaluate, it’s important to consider the following:

- Begin by prioritising the evaluation of work which reflects the core of your organisation’s mission.

- Try to strike a balance between breadth and depth of evaluation. This means evaluating a variety of different types of work, as well as evaluating a few works of the same type so that you can make more interesting analytical comparisons.

- Be ambitious about how much evaluation activity you plan to carry out. If it becomes clear that it requires more time than you have available, it is always easier to cut back than to look back and wish you had done more.

4.2. Deciding which evaluation templates you will use

The Toolkit comes with a set of evaluation templates which make it easy for you to set up the surveys you need. We recommend starting with a template, as it automatically does 95% of the work needed to create and configure an evaluation. A few tweaks may be necessary to complete the last 5%, and the time saved can go into customisation, which results in better surveys and better data.

Evaluation templates contain surveys for all potential respondent groups and come with a set of appropriate and well-designed questions.

You can easily include your chosen dimensions across all the surveys included in the template at the click of a button. Using the templates allows you to access a comprehensive Ambition Progress Report for your evaluated works and your organisation as a whole.

At the time of writing, there are templates for:

- Event

- Exhibition

- Participatory

The templates include a set of dimensions in each of the surveys, which will be replaced if you decide to use one of your own Dimension Lists.

The Event and Exhibition templates include:

- Captivation: It was absorbing and held my attention

- Thought Provoking: It was thought provoking

- Concept: It was an interesting idea

- Distinctiveness: It was different from things I’ve experienced before

- Relevance: It had something to say about modern society

- Rigour: It was well thought through and put together

The Participatory template includes:

- Enjoyment: I had a good time

- Experimenting: I felt comfortable trying new things

- Motivation: I feel motivated to do more creative things in the future

- Confidence: It made me feel more confident about doing new things

- Artistic Skills: It helped improve my artistic skills

Please note that these are likely to change over time in response to user requests and research. A full description of the templates can be found in our Evaluation Templates guidance.

Alternatively, you can preview the templates inside the platform. We recommend checking the templates’ content to help decide if they are a good match for the works you plan to evaluate.

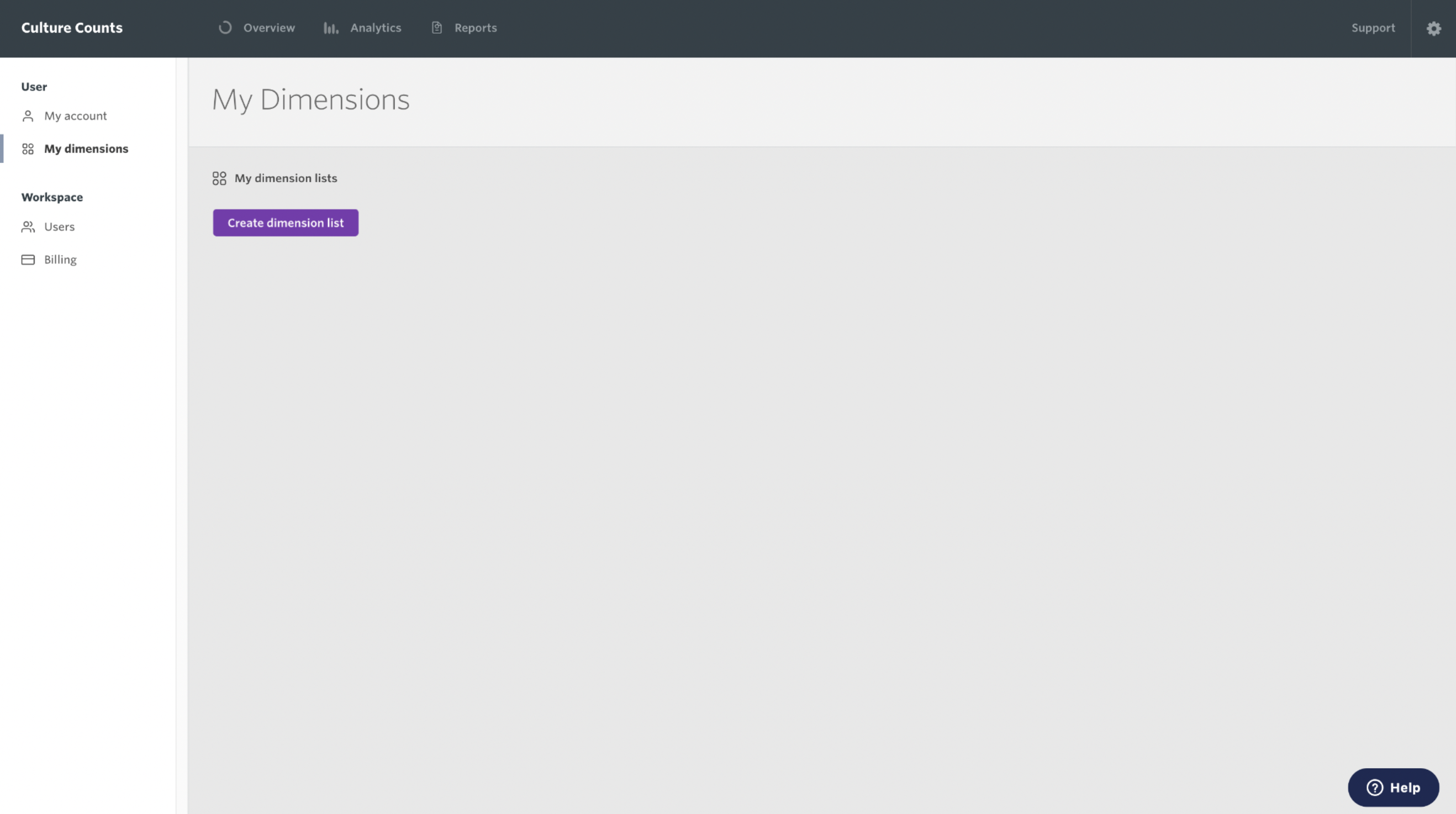

4.3. Creating dimension lists in the Culture Counts Platform

Who does this: Culture Counts Lead User

What you need: Access to the Culture Counts platform and your organisation’s articulated ambitions

The sets of dimensions you chose in the earlier step can be entered into the Culture Counts platform in the form of a Dimension List. A Dimension List in the platform is a predefined set of dimensions which can be easily inserted into evaluations when you create them.

It is recommended that the process of creating and managing the dimension lists is the responsibility of the Lead User.

You should create one list for your organisation’s overall ambitions, and then one list for each type of work that your organisation plans to evaluate.

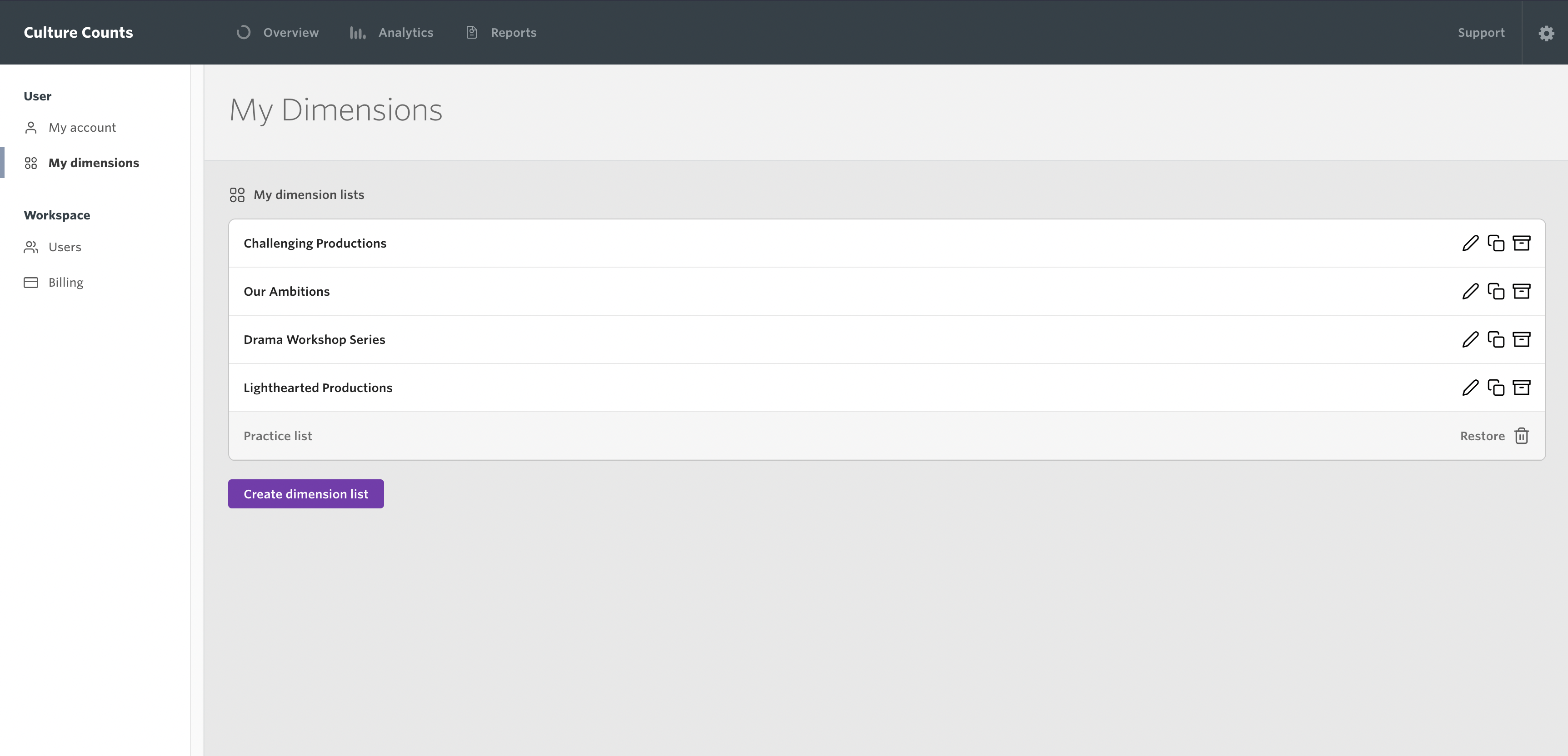

For example, you might create dimension lists for the work types ‘Participatory Workshops’, ‘Light-hearted Productions’ and ‘Challenging Productions’, and another list for your articulated ambitions – 4 in total.

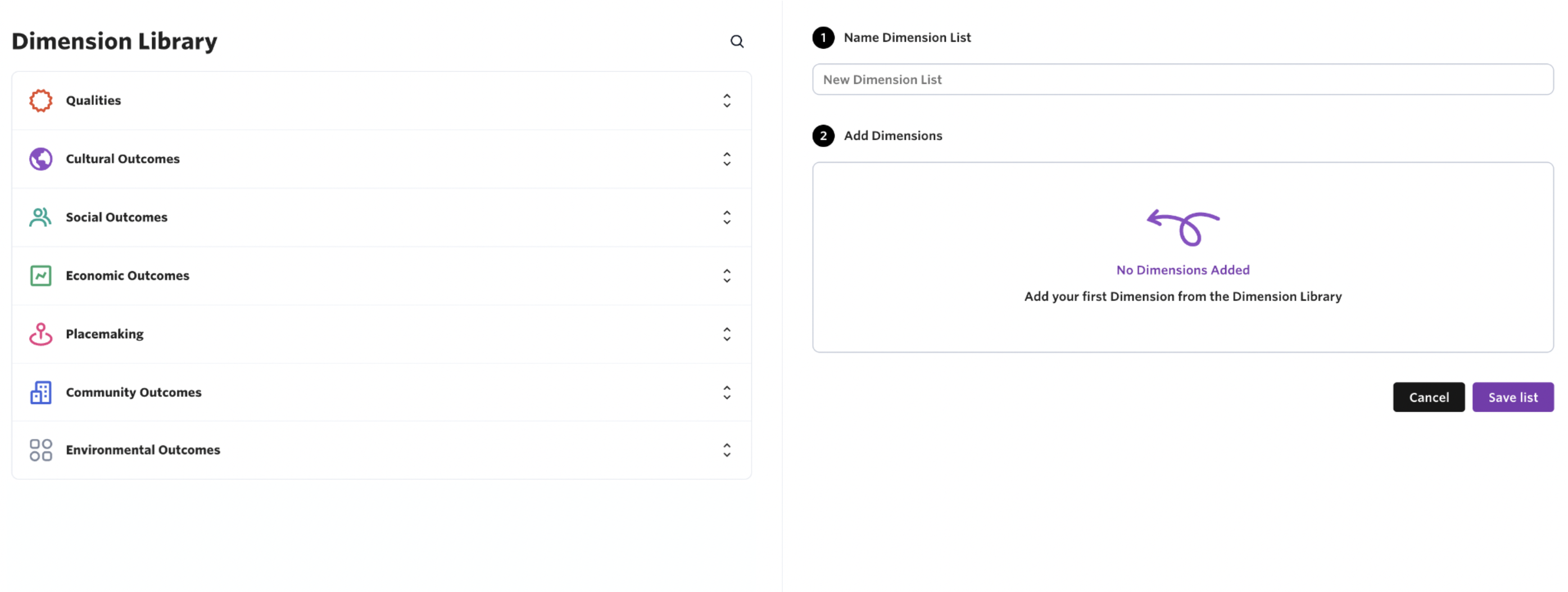

To do this, go to the Settings section of your Culture Counts account, where you can find the ‘My dimensions’ page. This page allows you to view and create dimension lists.

Clicking ‘Create dimension list’ will take you to the Dimension Selector tool where you can add the dimensions to the list and give it a name.

Upon completion of this step your organisation will have a set of dimension lists which anyone from your organisation will have access to, and which they can use to quickly populate evaluations they create.

For more details, please refer to our step-by-step guidance on dimension lists and the Dimension Selector.

4.4. Deciding which groups of people you will survey

Below, we list the different types of surveys you can use in the Toolkit. Using all the surveys together allows you to build a complete picture of the perceptions people have about the work you produce and the impact that it has on them.

Surveying the public is required if you are using the Toolkit to demonstrate your commitment to Ambition & Quality, or if you would like to access the Ambition Progress Reports.

Taking into account the resources you have available for running evaluations, decide which of the surveys you will be running.

4.4.1. Public Survey – Minimum standard

Survey members of the public who experience your work. You can send out digital surveys via email or social media, or survey people in person using tablets, whether you have Internet access or not.

4.4.2. Self Prior Survey – Optional

Survey your own staff before the work you are evaluating takes place. This captures their expectations for the work. You can send this survey to anyone in your organisation or just people directly involved in creating, producing or planning the work.

4.4.3. Peer Survey – Optional

Survey peers you have invited to experience and review your work. You can invite as many or as few peers as you like. A peer is someone whose professional opinion you value and who has some knowledge of your organisation, artform or subject matter. However, they do not have to be experts.

4.4.4. Self Post Survey – Optional

Survey your own staff after the work you are evaluating takes place. You can send this survey to anyone in your organisation. If you also carried out a Self Prior survey, it is recommended to send this survey to the same people. The completion of this survey encourages staff reflection on the work, where it succeeded and where there may be areas for future development.

5. Evaluation setup

What you need: Access to the Culture Counts platform and your organisation’s evaluation plans

Once your evaluations have been planned and you know which works you are evaluating and which dimensions you are using, you are now ready to create the evaluations in the Culture Counts platform.

Having already carried out the ‘Dimension selection & Articulating Ambitions’ step and ‘Evaluation planning & preparation’ step, this one should be fairly straightforward.

5.1. Creating Evaluations

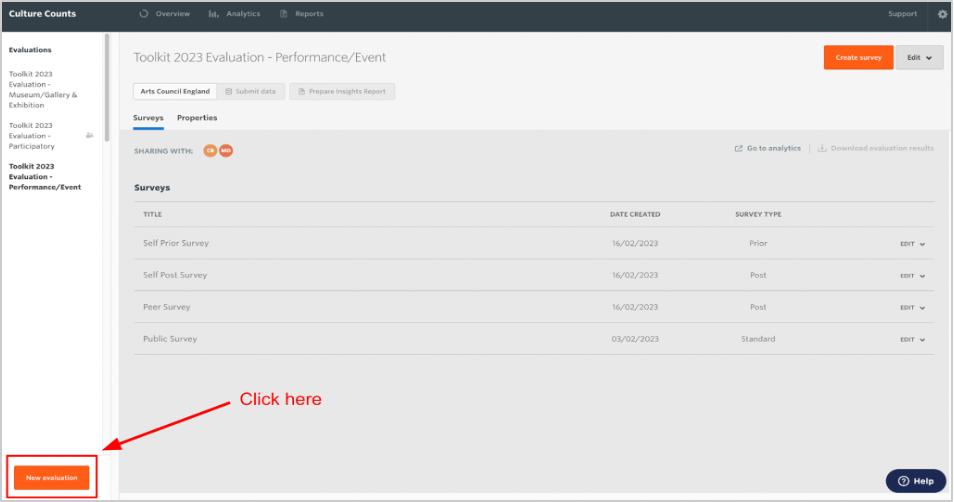

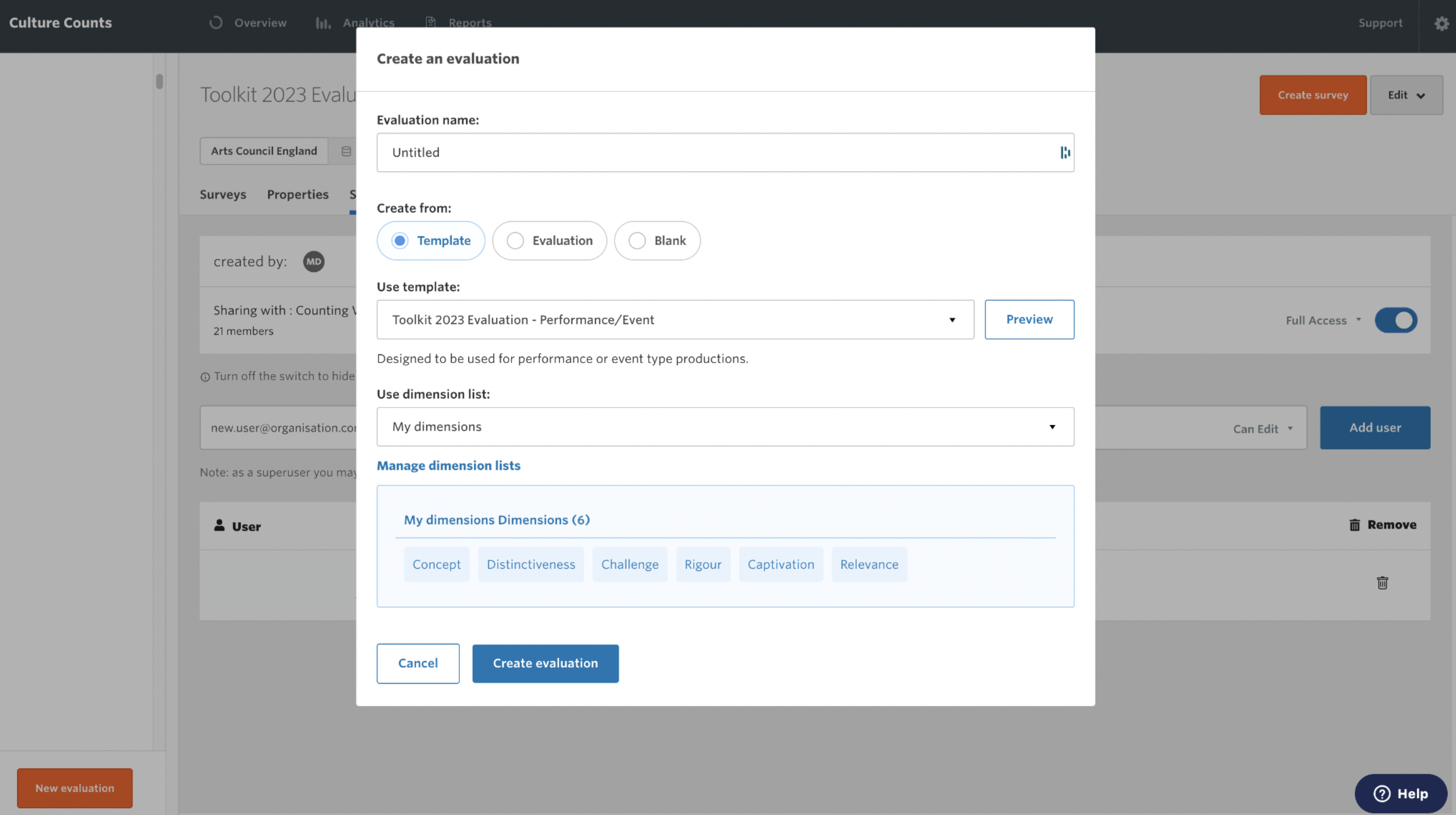

For each work your organisation has planned to evaluate, first click the ‘New evaluation’ button on the Culture Counts dashboard. Doing so will bring up the ‘Create an evaluation’ window.

The ‘Create an evaluation’ window gives you options to create a new evaluation from a template, from an existing evaluation (make a copy) or to create a blank evaluation. When using Culture Counts to evaluate an artistic or cultural work, we recommend selecting one of the available templates. To read about copying from an evaluation or creating a blank evaluation, please refer to our step-by-step Platform Basics guidance.

Once you have selected a template from the dropdown menu, choose a Dimension List to include in your evaluation. If you don’t have any dimension lists yet, please refer to our step-by-step guidance on dimension lists and the Dimension Selector.

The dimensions you select will be placed into the relevant sections of all the surveys within the evaluation template, saving you from doing this manually. This means the dimensions will populate the public, peer, self prior and self post surveys automatically.

Finally, don’t forget to enter the name of your evaluation in the ‘Evaluation name’ field. An evaluation is generally named after the specific event, project or work that you want to measure the impact of.

5.2. Customising Surveys

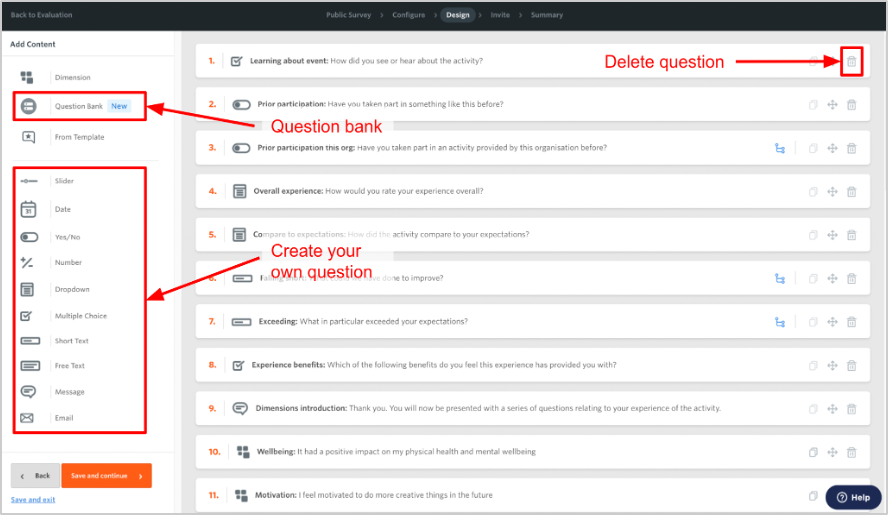

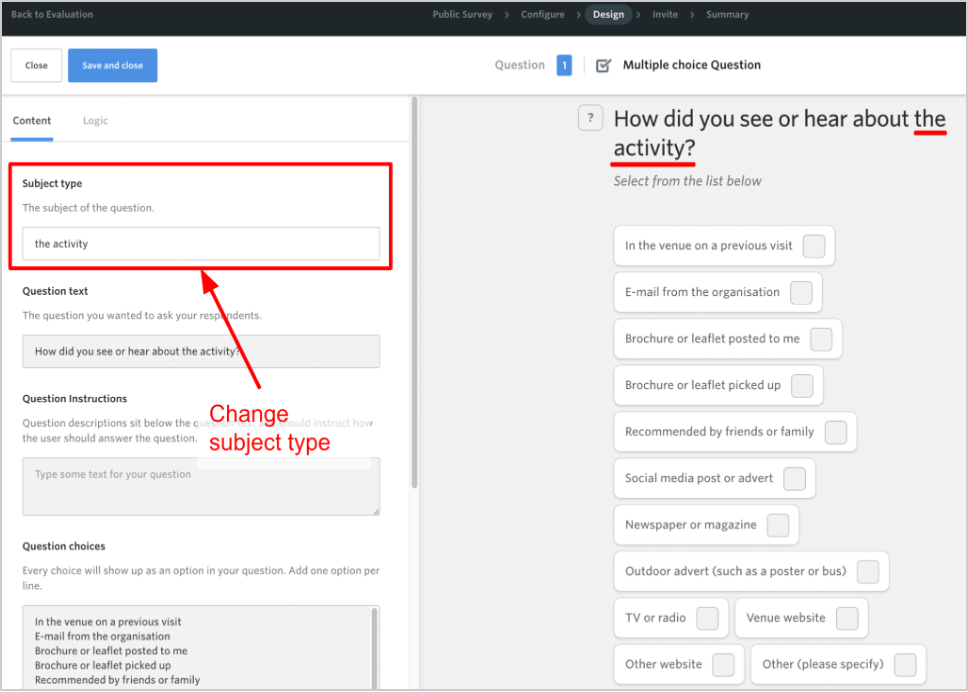

To customise your surveys, click on a survey you want to customise and navigate to the ‘Design’ tab.

If you have created an evaluation from a template and try to make changes in the ‘Design’ tab, you may notice that most or all of the question text cannot be changed. But don’t worry! You are still free to customise your evaluations as much as you like, whether you create from a template or not.

You can customise your evaluations in a few ways:

- Deleting questions: This one is pretty obvious, but if you don’t like a question at all you can simply delete it from the survey by clicking on the rubbish bin icon.

- Creating your own questions: On the survey ‘Design’ page is a set of content types that allow you to create your own survey questions from scratch using your own wording.

- Choosing a ready-made question: Also on the survey ‘Design’ page is a Question Bank. The Question Bank has a range of different categories, each of which contains a set of ready-made survey questions you can choose from. Questions from the question bank mostly have fixed text. For more information, please refer to our Question Bank guidance.

- Changing the subject of a question: Some of the questions refer to ‘the event’, ‘the activity’ or ‘the exhibition’. This is the subject of the question and can be changed to whatever you like, for example, the specific name of the event.

However, you are unable to edit the full question wording for questions that come with the template. This is because these questions are used to create the Ambition Progress Reports. If you wish to use a variant of a template question, you can delete the existing question and create your own with your preferred wording.

For detailed descriptions of all the survey question options you can choose from and how to use them, refer to our Platform Basics guidance.

5.3. Adding Evaluation Properties

Evaluation properties are the metadata or ‘tags’ that are associated with your evaluation’s data. Add evaluation properties by clicking on an evaluation in the Culture Counts dashboard and clicking on the ‘Properties’ button. The different types of properties you can use and their benefits are outlined below.

| Properties | What it is | Benefits | Example |
| --- | --- | --- | --- |
| Description | This is about describing the type of work that is being evaluated. It includes the ability to tag your work with artform(s). | This enables us to provide artform-specific benchmarks in our progress reporting. | Type: Festival; Artform: Combined Arts; Specific artforms: Carnival & Public Celebration |
| Location | This is about describing the physical location(s) for the work or whether the work takes place online or is touring. | The online and touring tags enable us to produce and use more accurate benchmarks. | Online: No; Address: Lever St, Manchester, M1 1FN; Touring: No |
| Attendance | This is about giving a known or estimated attendance/audience figure for a piece of work. | This helps with calculating the margin of error: giving an attendance number improves the accuracy of the margin of error. | Attendance: 250 |
| Duration | This is about giving a start and end date for the work. If it is a single-day event, the start and end date can be the same day. | This helps us to accurately report when given works take place. | Start Date: 20th April 2023; End Date: 27th April 2023 |
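This guide does not say exactly how the Toolkit calculates the margin of error, so the sketch below uses a standard textbook method as an assumption: the 95% margin of error for a proportion (worst case p = 0.5), optionally reduced by the finite population correction when the attendance figure is known. It illustrates why supplying attendance matters: when a sizeable share of the audience responds, the correction narrows the margin. The function name and parameters are hypothetical.

```python
import math


def margin_of_error(responses, attendance=None, z=1.96, p=0.5):
    """Assumed textbook margin of error for a proportion, as a fraction (0.05 means +/-5 points).

    z=1.96 corresponds to 95% confidence; p=0.5 is the most conservative choice.
    If attendance (the population size) is given, apply the finite population correction.
    This is an illustration, not the Toolkit's documented method.
    """
    if responses <= 0:
        raise ValueError("need at least one response")
    moe = z * math.sqrt(p * (1 - p) / responses)
    if attendance is not None:
        if responses > attendance:
            raise ValueError("responses cannot exceed attendance")
        if attendance > 1:
            # Finite population correction: shrinks the margin as responses approach attendance.
            moe *= math.sqrt((attendance - responses) / (attendance - 1))
    return moe


# Same number of responses, with and without the attendance figure from the table above.
print(f"{margin_of_error(50):.3f}")                  # about 0.139
print(f"{margin_of_error(50, attendance=250):.3f}")  # about 0.124
```

Under these assumptions, 50 responses give roughly ±13.9 percentage points without an attendance figure and roughly ±12.4 with the example attendance of 250.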

6. Carrying out your evaluations

Who does this: Culture Counts Lead User

What you need: Access to the Culture Counts platform and your organisation’s evaluation plans

The process of carrying out your evaluations is about sending surveys to the right people at the right time, and therefore ensuring that your organisation captures the feedback it needs to understand how its work is perceived.

In explaining this step, we separate the process of surveying the public from the process of surveying self assessors and peer reviewers. This is because the process looks different for different respondent types, and the challenges around them are different.

With the public, the challenge is typically in ensuring that enough people see and complete the surveys so you can collect a representative sample.

With peer reviewers, the challenge is typically in finding a good group of peers for each evaluation who are happy to give their time to experience the work and to provide a review.

6.1. Surveying the public

In preparing for this step, it is a good idea to consider our Sample Size guidance which gives best practice techniques that will help you to get a more representative sample of the people who experience your work.

6.1.1. Choosing survey delivery options

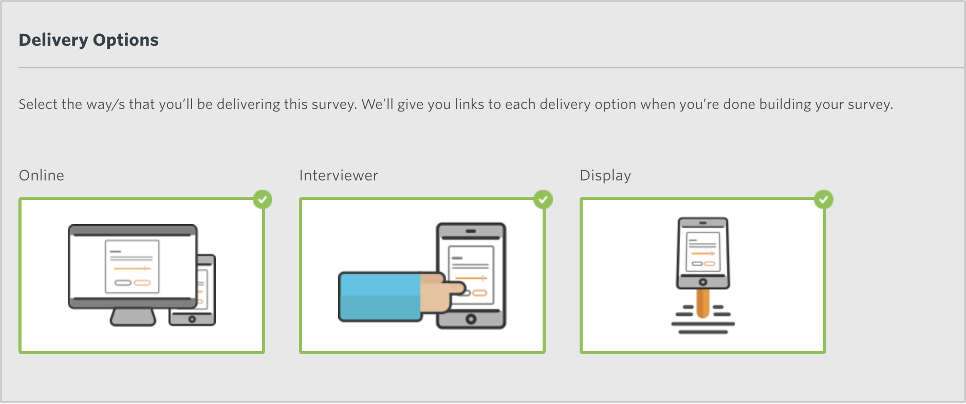

There are 3 ways to collect surveys from public respondents:

- Online – This means the respondent completes the survey on their own computer or smartphone. This option can only collect 1 response per device.

- Interviewer – This means an interviewer engaging the public face-to-face using a portable device. This option can collect multiple responses per device.

- Display – This means an autonomous display situated near the work, which the public can interact with independently. This option can collect multiple responses per device.

The Online delivery type is the default option and is used the most often. However, you can use any combination, or all three, if you would like.

Specify the delivery options you plan to use on the ‘Configure’ page of the survey. At the bottom of this page you will find the ‘Delivery Options’ section where you can choose: Online, Interviewer or Display.

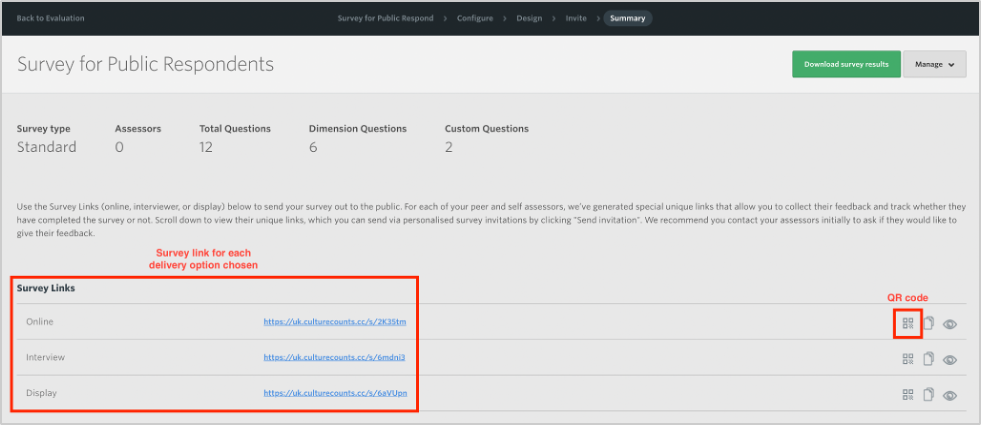

6.1.2. Distributing surveys

Begin by going to the ‘Summary’ page for the survey. On this page you can see a survey link for each delivery option that you chose on the ‘Configure’ page.

To distribute a survey via email or on social media, copy the ‘Online’ link and paste it into your email or social media post. You can then send it to the people who have experienced your work or share it on social media with your audience.

When using interviewers, copy the ‘Interview’ link and open the link on the device(s) that you are using for interviews e.g., a tablet computer. Detailed instructions on how to carry out interviews can be found in our Interviewers guidance.

To collect responses on an autonomous display, copy the ‘Display’ link and open it on the device(s) that you are using e.g. a static tablet on a podium.

You can also generate QR codes for all 3 delivery types, which can be useful for free or outdoor events where you are less likely to have access to people’s email addresses. If someone scans the QR code with their smartphone it will take them to the survey, and they can complete it on their phone.

It is crucial that you use the correct link. ‘Online’ surveys can only collect one response per device, so if you use an ‘Online’ link for interviewing or display surveys, each new response on that device will overwrite the previous one.
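To make the overwriting behaviour concrete, here is a toy model in Python. It is an illustrative assumption, not how the Culture Counts platform is actually built: ‘Online’ responses are stored in one slot per device, so a second response from the same device replaces the first, whereas ‘Interviewer’ and ‘Display’ responses are all kept. The class, method and device names are hypothetical.

```python
from collections import defaultdict


class ToySurveyStore:
    """Toy model (not the Culture Counts implementation) of how delivery types treat responses."""

    def __init__(self):
        # 'online': one slot per device, so a later response replaces an earlier one.
        self.online = {}
        # 'interviewer' / 'display': every response from a device is kept.
        self.shared = defaultdict(list)

    def submit(self, delivery, device_id, answers):
        if delivery == "online":
            self.online[device_id] = answers
        elif delivery in ("interviewer", "display"):
            self.shared[device_id].append(answers)
        else:
            raise ValueError(f"unknown delivery type: {delivery}")

    def count(self):
        """Total number of responses currently stored."""
        return len(self.online) + sum(len(r) for r in self.shared.values())


store = ToySurveyStore()
# Three visitors use the same tablet with the 'Online' link: only the last response survives.
for score in (70, 80, 90):
    store.submit("online", "tablet-1", {"Captivation": score})
print(store.count())  # 1
```

Running the same three submissions with `"interviewer"` or `"display"` would keep all three responses, which is why interviewers and displays need their own links.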

6.2. Surveying self assessors and peer reviewers

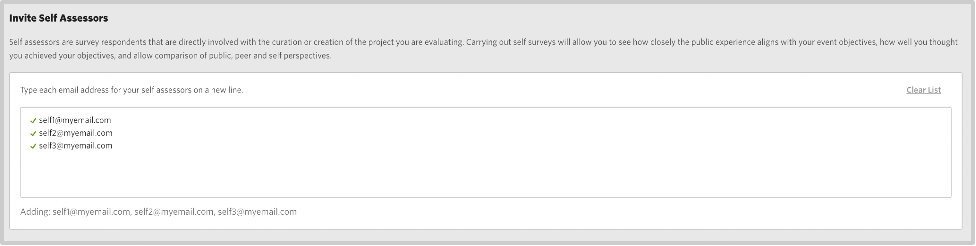

For self assessors and peer reviewers, the process is a little different from surveying the public. For these respondent types, there is a unique survey link for each person that you want to complete the survey, so you need to specify the email addresses of the people you would like to complete it.

6.2.1. Inviting self assessors

On the ‘Invite’ page, navigate to the ‘Invite Self Assessors’ section and type the email addresses of the self assessors you want to invite. Type each email address on a new line; then click ‘Save and continue’.

6.2.2. Inviting peers from the Peer Matching Resource

The Peer Matching Resource provides Impact & Insight Toolkit users with a list of sector professionals who have registered their interest in completing peer reviews for users of the Toolkit.

The Peer Matching Resource is located on the ‘Invite’ page. Use it to browse registered peers and invite them through the Culture Counts platform. Read our step-by-step Peer Matching Resource walkthrough for detailed information.

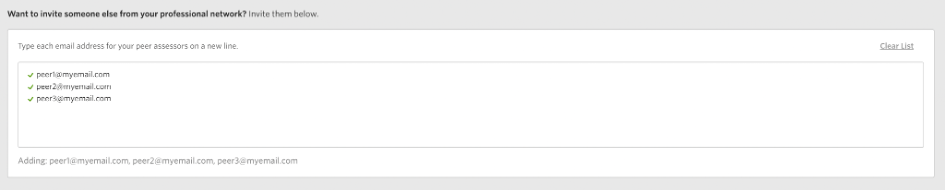

6.2.3. Inviting peers from your professional network/via email

On the ‘Invite’ page, navigate to the ‘Want to invite someone else from your professional network?’ section and type the email addresses of the peers you want to invite. Type each email address on a new line, then click ‘Save and continue’.

Note: By doing this you are not registering these individuals to the Peer Matching Resource. You are simply using them as a peer for this particular evaluation and their details will not be shared with other users.

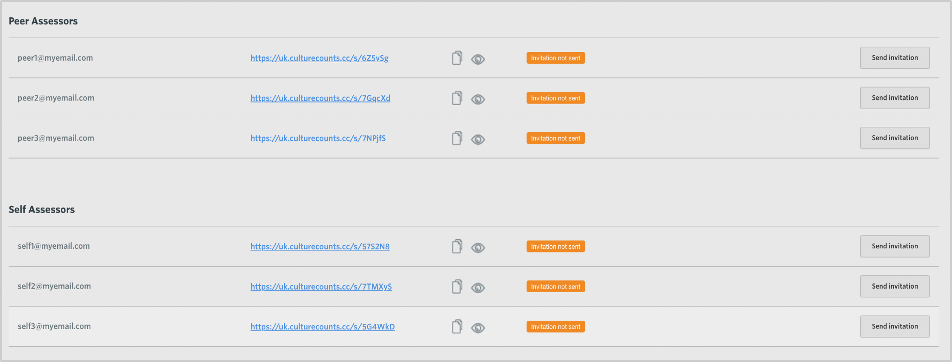

6.2.4. Sending self and peer surveys

On the ‘Summary’ page, navigate to the ‘Peer Assessors’ and ‘Self Assessors’ sections. The peers and self assessors you invited at the ‘Invite’ stage are listed here, each with a unique link. Each peer reviewer and self assessor must be sent their own unique URL.

If you used the Peer Matching Resource, a peer’s email address will only be displayed on the Summary page once they have accepted your invitation.

Click the ‘Send invitation’ button to send the survey to your peer through the Culture Counts platform. We recommend also copying and pasting their unique link into an email and sending it from your email account.

The ‘Invitation not sent’ tag will update to ‘Survey response pending’. Once the survey has been completed the tag will update to ‘Survey completed’.

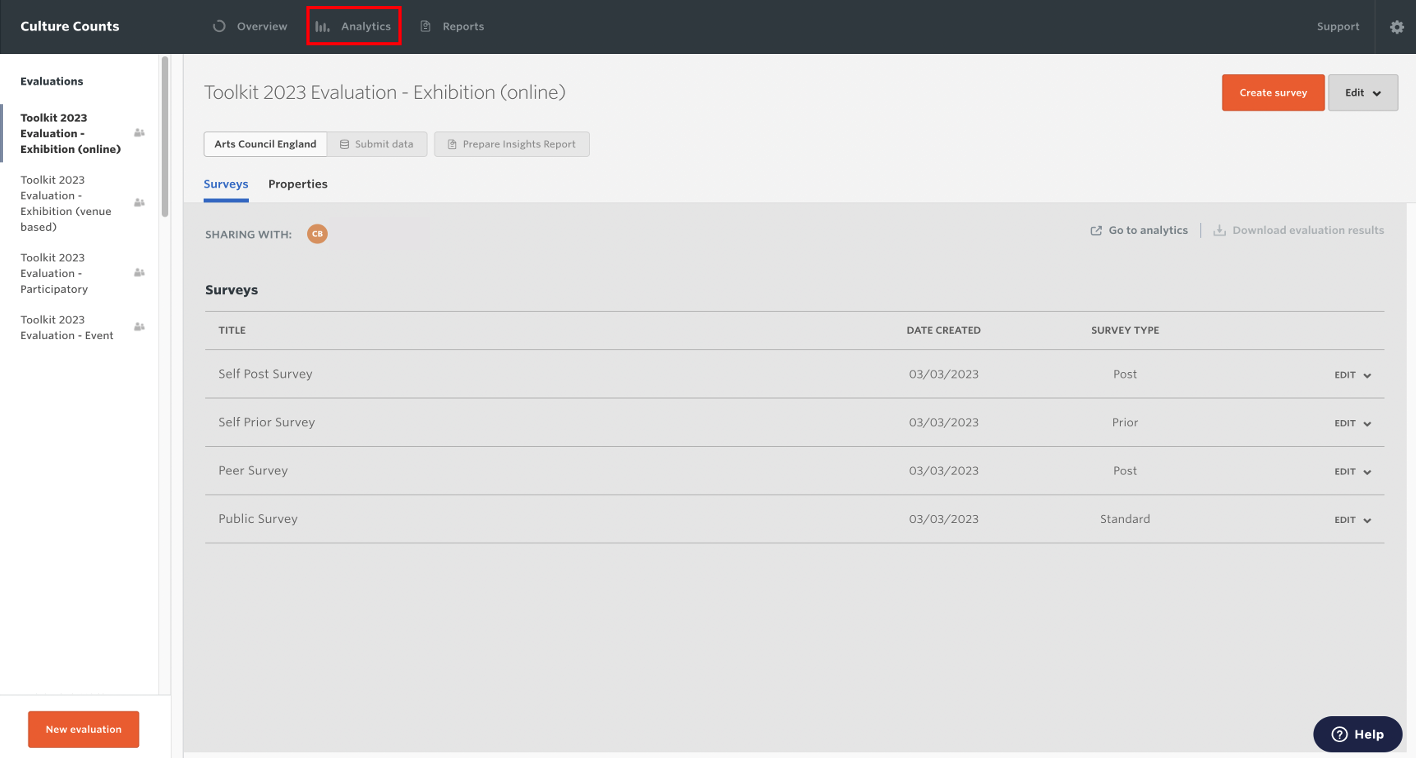

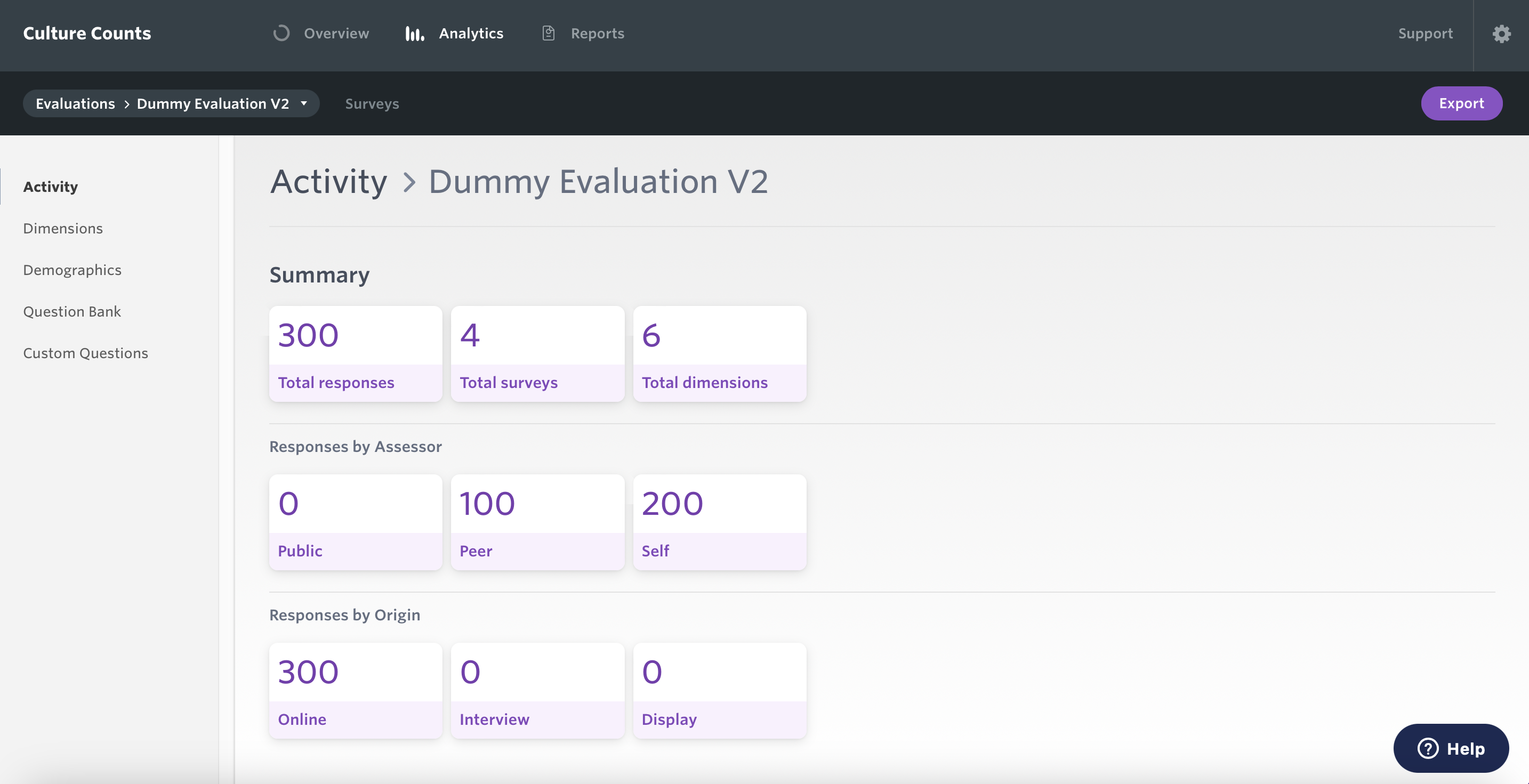

6.3. Monitoring your evaluation

You can use the in-platform dashboard to check that data is coming in for your evaluation. To do this, click the ‘Analytics’ button at the top of the dashboard.

The Analytics Dashboard lets you view activity as a Summary (including total responses), as well as broken down by Assessor and by Origin.

7. Insight and learning

This final stage is the reason for evaluating in the first place. It is where the data you have collected helps you understand how your work is perceived and how this compares with your ambitions.

Two types of report are available with the Toolkit:

- Dimension Benchmarking Report

- Insights Reports

In addition to creating these reports, you can explore and download charts in the Analytics Dashboard and in the Reporting Dashboard. You can also download the raw data for any of your evaluations as a CSV file, which allows you to carry out custom analysis or import the data into other platforms as needed.

7.1. Reporting Dashboard 2.0

The Reporting Dashboard is built and provided by Counting What Counts to support the Impact & Insight Toolkit project. It focuses on two things: comparing and benchmarking the results of dimension questions, and, where standardised demographic questions are used, showing the demographic makeup of the people you have surveyed. All the charts and tables in the dashboard can be downloaded at the click of a button, and a simple Dimension Benchmarking Report can also be created and downloaded ‘on-demand’, according to your organisation’s schedule and needs.

Dimension Benchmarking Report

Dimension Benchmarking Reports are made up of the charts and tables on the Dimension Benchmarking page of the Reporting Dashboard and can be downloaded as a PDF for easy sharing. This downloadable report offers an overview of dimension performance, using dimension results compared against benchmarks as the measure of performance.

It has been made available with the intention of supporting conversations around whether, and to what extent, ambitions are being met across your different evaluated works.

We recommend these reports are tabled at your organisation’s board meetings.

7.2. Insights Reports

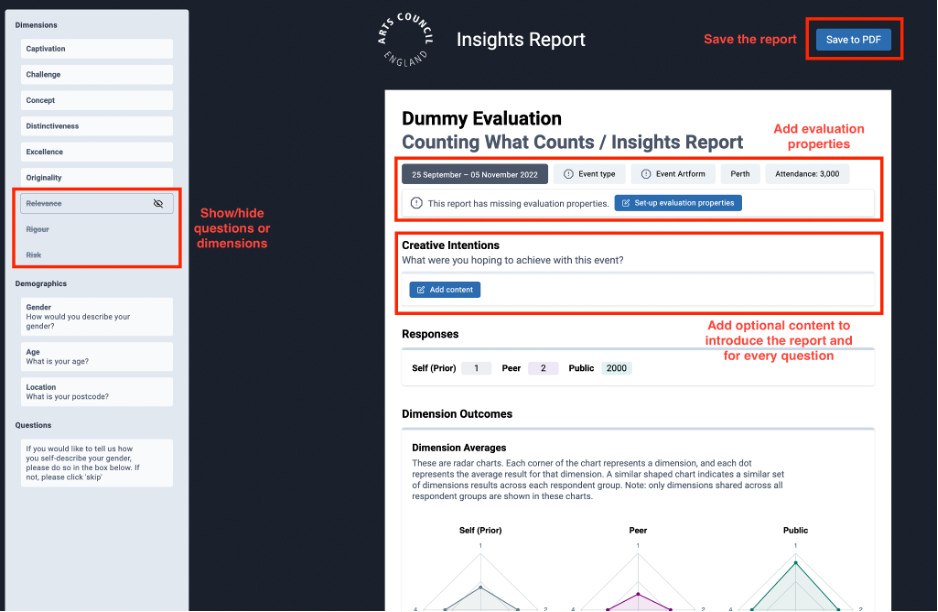

An Insights Report is a document that can be auto-generated from an evaluation in the Culture Counts platform. It presents all data collected from public surveys, including custom questions, and dimension results from self and peer surveys. Once you have finished collecting data for an evaluation, you can create an Insights Report. Please refer to our step-by-step Insights Report guidance for more detailed information.

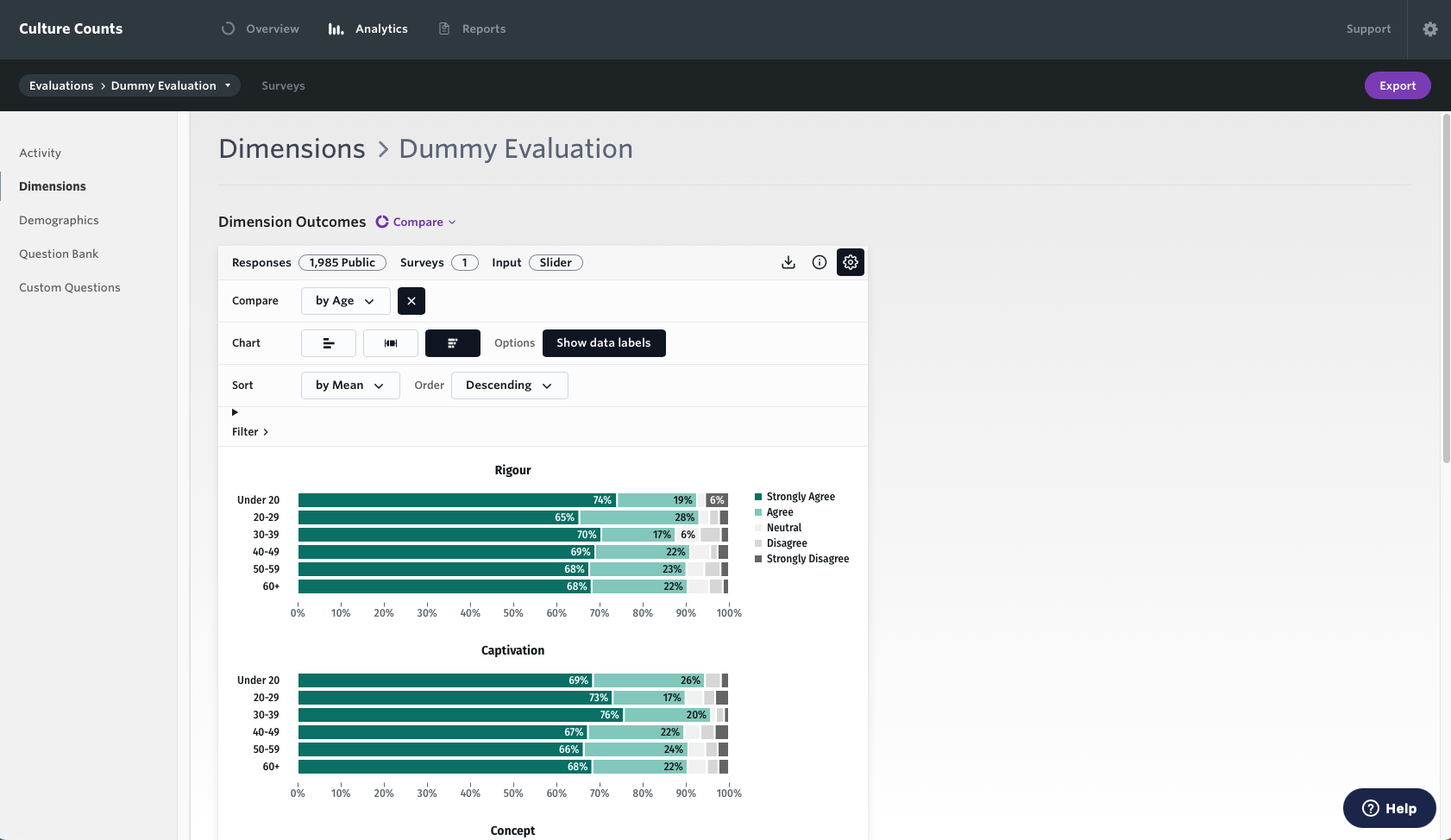

7.3. Analytics Dashboard

The Analytics Dashboard lets you explore charts of your survey data, updated in real time. It was introduced in the ‘Monitoring your evaluation’ section of this guide.

You can access the Analytics Dashboard by clicking the ‘Analytics’ button on the Culture Counts home page.

The dashboard displays a chart for each question in the survey. To download the charts, click the ‘Export’ button at the top right of the page. This gives you the option to: Download all charts (PDF), Download all charts (ZIP) or Export Raw Data (CSV).

7.4. Downloading raw data

The Culture Counts platform exports survey data as comma-separated values files, or ‘CSVs’. CSVs are a common way to store tabular data and are the default format for exporting survey data across many platforms, including Culture Counts. CSVs can be opened and edited in most spreadsheet software, including Microsoft Excel and Apple Numbers, enabling you to conduct further analysis.

Raw data can be downloaded from the Analytics Dashboard using the ‘Export’ button, or you can download a CSV file of your data for specific surveys by opening a survey and clicking on the ‘Download survey results’ button on the Summary page.
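If you prefer to analyse the exported CSV with a script rather than a spreadsheet, the sketch below shows one possible approach: it counts how often each answer appears in a chosen column. It uses only Python’s standard library. The column names in the example (`respondent`, `origin`) and the sample rows are hypothetical placeholders, not the actual layout of a Culture Counts export, so check the headers in your own file before using it.

```python
import csv
import io
from collections import Counter


def count_values(csv_text: str, column: str) -> Counter:
    """Count how often each value appears in one column of a CSV.

    `column` must match a header in your export exactly; the names
    used in the example below are hypothetical.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames is None or column not in reader.fieldnames:
        raise KeyError(f"column {column!r} not found in CSV header")
    # Skip empty cells so unanswered questions are not counted.
    return Counter(row[column] for row in reader if row[column])


def count_values_in_file(path: str, column: str) -> Counter:
    """Read a downloaded CSV file from disk and count one column."""
    # 'utf-8-sig' also copes with a byte-order mark, if one is present.
    with open(path, newline="", encoding="utf-8-sig") as f:
        return count_values(f.read(), column)


if __name__ == "__main__":
    # Made-up rows for illustration only.
    sample = "respondent,origin\n1,Online\n2,Interview\n3,Online\n4,\n"
    print(count_values(sample, "origin"))
```

Counting from a string keeps the core logic easy to test, while `count_values_in_file` is the part you would point at a file downloaded via the ‘Export’ or ‘Download survey results’ buttons.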